Details

- Type: Improvement
- Resolution: Not a Bug
- Priority: Major
- None
- Lustre 2.13.0


Description

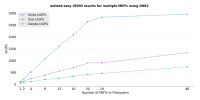

While testing in an environment with a single parent directory containing one subdirectory shared by all client mdtest ranks, we observe very little scaling beyond 2 MDTs. Results for 0K (empty) file creates with 1 million objects per MDT:

| MDTs | 0K file creates (ops/s) |
| --- | --- |
| 1 | 83,948 |
| 2 | 115,929 |
| 3 | 123,186 |
| 4 | 130,846 |

Stats and deletes show similar results: instead of scaling linearly with the number of MDTs, throughput plateaus. We do not appear to be the only ones seeing this. A recent Cambridge University IO-500 presentation at LAD'19 includes a slide with very similar results (fourth from the bottom): https://www.eofs.eu/_media/events/lad19/03_matt_raso-barnett-io500-cambridge.pdf
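To put a number on the plateau, the create rates from the 1 to 4 MDT runs can be converted into speedup relative to 1 MDT and efficiency relative to ideal linear scaling. The Python sketch below does this. It assumes the figures are mdtest rates in operations per second, and `scaling_table` and `marginal_gains` are hypothetical helpers written for illustration, not part of mdtest or Lustre.

```python
# 0K file-create rates from the runs above (1 million objects per MDT).
# Assumption: values are mdtest rates in operations per second.
CREATE_RATES = {1: 83_948, 2: 115_929, 3: 123_186, 4: 130_846}


def scaling_table(rates):
    """Return (mdts, speedup vs. the smallest MDT count, efficiency) rows."""
    base = rates[min(rates)]
    rows = []
    for mdts in sorted(rates):
        speedup = rates[mdts] / base
        # Efficiency of 1.0 would mean perfectly linear scaling with MDT count.
        efficiency = speedup / mdts
        rows.append((mdts, speedup, efficiency))
    return rows


def marginal_gains(rates):
    """Extra ops/s contributed by each additional MDT over the previous count."""
    keys = sorted(rates)
    return {cur: rates[cur] - rates[prev] for prev, cur in zip(keys, keys[1:])}


if __name__ == "__main__":
    for mdts, speedup, eff in scaling_table(CREATE_RATES):
        print(f"{mdts} MDT(s): speedup {speedup:.2f}x, efficiency {eff:.0%}")
    print("gain per added MDT:", marginal_gains(CREATE_RATES))
```

Run as a script, it reports speedups of about 1.38x, 1.47x and 1.56x at 2, 3 and 4 MDTs (efficiency falling from 69% to 39%). It also shows that each MDT beyond the second adds only about 7,300 to 7,700 creates/s, compared with roughly 32,000 for the second.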


Issue Links

- is related to: LU-9436 DNE2 - performance improvement with wide stripping directory (status: Open)