Details

- Bug
- Resolution: Fixed
- Major
- None
- Lustre 2.10.7
- None
- RHEL 7.2.1511, lustre version 2.10.7-1
- 3


Description

The MDS filesystem is full and we cannot free space on it: the MDS crashes (kernel panic) whenever we try to delete files.

Apr 13 16:01:50 emds1 kernel: LDISKFS-fs (md0): mounted filesystem with ordered data mode. Opts: user_xattr,errors=remount-ro,no_mbcache,nodelalloc
Apr 13 16:01:50 emds1 kernel: LustreError: 11368:0:(osd_handler.c:7131:osd_mount()) echo-MDT0000-osd: failed to set lma on /dev/md0 root inode
Apr 13 16:01:50 emds1 kernel: LustreError: 11368:0:(obd_config.c:558:class_setup()) setup echo-MDT0000-osd failed (-30)
Apr 13 16:01:50 emds1 kernel: LustreError: 11368:0:(obd_mount.c:203:lustre_start_simple()) echo-MDT0000-osd setup error -30
Apr 13 16:01:50 emds1 kernel: LustreError: 11368:0:(obd_mount_server.c:1848:server_fill_super()) Unable to start osd on /dev/md0: -30
Apr 13 16:01:50 emds1 kernel: LustreError: 11368:0:(obd_mount.c:1582:lustre_fill_super()) Unable to mount (-30)
Apr 13 16:02:01 emds1 kernel: LDISKFS-fs (md0): mounted filesystem with ordered data mode. Opts: user_xattr,errors=remount-ro,no_mbcache,nodelalloc
Apr 13 16:02:01 emds1 kernel: Lustre: MGS: Connection restored to 8f792be4-fada-1d75-0dbd-ec8601cdce7f (at 0@lo)
Apr 13 16:02:01 emds1 kernel: LustreError: 11438:0:(genops.c:478:class_register_device()) echo-OST0000-osc-MDT0000: already exists, won't add
Apr 13 16:02:01 emds1 kernel: LustreError: 11438:0:(obd_config.c:1682:class_config_llog_handler()) MGC10.23.22.104@tcp: cfg command failed: rc = -17
Apr 13 16:02:01 emds1 kernel: Lustre: cmd=cf001 0:echo-OST0000-osc-MDT0000 1:osp 2:echo-MDT0000-mdtlov_UUID
Apr 13 16:02:01 emds1 kernel: LustreError: 15c-8: MGC10.23.22.104@tcp: The configuration from log 'echo-MDT0000' failed (-17). This may be the result of communication errors between this node and the MGS, a bad configuration, or other errors. See the syslog for more information.
Apr 13 16:02:01 emds1 kernel: LustreError: 11380:0:(obd_mount_server.c:1389:server_start_targets()) failed to start server echo-MDT0000: -17
Apr 13 16:02:01 emds1 kernel: LustreError: 11380:0:(obd_mount_server.c:1882:server_fill_super()) Unable to start targets: -17
Apr 13 16:02:01 emds1 kernel: Lustre: Failing over echo-MDT0000
Apr 13 16:02:07 emds1 kernel: Lustre: 11380:0:(client.c:2116:ptlrpc_expire_one_request()) @@@ Request sent has timed out for slow reply: [sent 1586818921/real 1586818921] req@ffff8d748ab38000 x1663898946110400/t0(0) o251->MGC10.23.22.104@tcp@0@lo:26/25 lens 224/224 e 0 to 1 dl 1586818927 ref 2 fl Rpc:XN/0/ffffffff rc 0/-1
Apr 13 16:02:08 emds1 kernel: Lustre: server umount echo-MDT0000 complete
Apr 13 16:02:08 emds1 kernel: LustreError: 11380:0:(obd_mount.c:1582:lustre_fill_super()) Unable to mount (-17)
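For readers of the log above: the Lustre return codes shown are negated Linux errno values, so -30 is EROFS (read-only file system) and -17 is EEXIST (file exists). The following is a minimal sketch for decoding them from log lines. The names `LINUX_ERRNO`, `RC_PATTERN` and `decode_return_codes` are hypothetical helpers introduced for illustration, not part of Lustre, and the table only covers the two codes that appear in this ticket.

```python
import re

# Linux errno values seen in the log above. Hard-coded (assumption: Linux
# numbering) so the sketch does not depend on the platform it runs on.
LINUX_ERRNO = {
    17: ("EEXIST", "File exists"),
    30: ("EROFS", "Read-only file system"),
}

# Matches the forms used in the log: "(-30)", "error -30", ": -30", "rc = -17".
RC_PATTERN = re.compile(r"(?:\(|error |: |rc = )-(\d+)\b")


def decode_return_codes(line):
    """Return a list of (code, name, description) for each negative code found."""
    decoded = []
    for match in RC_PATTERN.finditer(line):
        code = int(match.group(1))
        name, description = LINUX_ERRNO.get(code, ("UNKNOWN", "not in table"))
        decoded.append((-code, name, description))
    return decoded


if __name__ == "__main__":
    sample = ("LustreError: 11368:0:(obd_mount.c:1582:lustre_fill_super()) "
              "Unable to mount (-30)")
    for code, name, description in decode_return_codes(sample):
        print(f"{code}: {name} ({description})")
```

Read this way, the first mount attempt fails with a read-only file system error, and the second fails because the device `echo-OST0000-osc-MDT0000` is reported as already registered.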

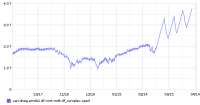

Our directory trees are ridiculously deep and overused for structuring data, so this doesn't surprise me. I'm still not sure what changed at the end of Feb though, so we're going to have to watch this carefully.

Checking this morning, backups are still running and seem to be somewhat stable, so I'll let a good backup complete and then try to take it offline to run a new e2fsck.

After we've had a successful e2fsck, I'd like to upgrade to 2.12.4. Would it be sensible to run an lfsck before the upgrade, or afterwards so that it benefits from all the updates and bug fixes?