Details

-

Bug

-

Resolution: Unresolved

-

Minor

-

None

-

None

-

None

-

3

-

9223372036854775807

Description

Client is getting evicted by MDT as soon as nfsd service started on the client.

client (golf1) kernel version : 3.10.0-1062.1.1.el7_lustre.x86_64

client (golf1) lustre version : lustre-2.12.3-1.el7.x86_64

mds (gmds1) kernel version : 3.10.0-1062.1.1.el7_lustre.x86_64

mds (gmds1) lustre version : lustre-2.12.3-1.el7.x86_64

oss (goss1-goss6) kernel version : 3.10.0-1062.1.1.el7_lustre.x86_64

oss (goss1-goss6) lustre version : lustre-2.12.3-1.el7.x86_64

/etc/exports on golf1 :

/user_data 10.25.0.0/16(fsid=123456789,rw,anonuid=0,insecure,no_subtree_check,insecure_locks,async)

Apr 30 14:03:07 golf1 kernel: LustreError: 11-0: golf-MDT0000-mdc-ffff973bf6409800: operation ldlm_enqueue to node 10.25.22.90@tcp failed: rc = -107 Apr 30 14:03:07 golf1 kernel: Lustre: golf-MDT0000-mdc-ffff973bf6409800: Connection to golf-MDT0000 (at 10.25.22.90@tcp) was lost; in progress operations using this service will wait for recovery to complete Apr 30 14:03:07 golf1 kernel: LustreError: Skipped 8 previous similar messages Apr 30 14:03:07 golf1 kernel: LustreError: 167-0: golf-MDT0000-mdc-ffff973bf6409800: This client was evicted by golf-MDT0000; in progress operations using this service will fail. Apr 30 14:03:07 golf1 kernel: LustreError: 25491:0:(file.c:4339:ll_inode_revalidate_fini()) golf: revalidate FID [0x20004884e:0x16:0x0] error: rc = -5 Apr 30 14:03:07 golf1 kernel: ------------[ cut here ]------------ Apr 30 14:03:07 golf1 kernel: WARNING: CPU: 26 PID: 25600 at fs/nfsd/nfsproc.c:805 nfserrno+0x58/0x70 [nfsd] Apr 30 14:03:07 golf1 kernel: LustreError: 25579:0:(file.c:216:ll_close_inode_openhandle()) golf-clilmv-ffff973bf6409800: inode [0x20004884e:0x15:0x0] mdc close failed: rc = -108 Apr 30 14:03:07 golf1 kernel: ------------[ cut here ]------------ Apr 30 14:03:07 golf1 kernel: ------------[ cut here ]------------ Apr 30 14:03:07 golf1 kernel: nfsd: non-standard errno: -108 Apr 30 14:03:07 golf1 kernel: ------------[ cut here ]------------ Apr 30 14:03:07 golf1 kernel: WARNING: CPU: 54 PID: 25602 at fs/nfsd/nfsproc.c:805 nfserrno+0x58/0x70 [nfsd] Apr 30 14:03:07 golf1 kernel: LustreError: 25579:0:(file.c:216:ll_close_inode_openhandle()) Skipped 2 previous similar messages Apr 30 14:03:07 golf1 kernel: WARNING: CPU: 24 PID: 25601 at fs/nfsd/nfsproc.c:805 nfserrno+0x58/0x70 [nfsd] Apr 30 14:03:07 golf1 kernel: WARNING: CPU: 9 PID: 25505 at fs/nfsd/nfsproc.c:805 nfserrno+0x58/0x70 [nfsd]

So I wonder if reducing amount of mdt locks would at least help you temporarily since the problem is clearly related to parallel cancels.

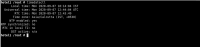

can you please run this on your nfs export node(s)? The setting is not permanent and would reset on node reboot/lustre client remount.