Details

- Bug
- Resolution: Fixed
- Major
- Lustre 2.4.0
- None
- 3
- 5771

Description

During Minh's performance test on the OpenSFS cluster, we found a significant performance degradation.

b2_3 test result

    [root@c24 bin]# ./run_mdsrate_create
    0: c01 starting at Thu Dec 6 08:48:39 2012
    Rate: 26902.88 eff 26902.57 aggr 140.12 avg client creates/sec (total: 192 threads 3840000 creates 192 dirs 1 threads/dir 142.74 secs)
    0: c01 finished at Thu Dec 6 08:51:02 2012
    [root@c24 bin]# ./run_mdsrate_stat
    0: c01 starting at Thu Dec 6 08:51:50 2012
    Rate: 169702.53 eff 169703.11 aggr 883.87 avg client stats/sec (total: 192 threads 3840000 stats 192 dirs 1 threads/dir 22.63 secs)
    0: c01 finished at Thu Dec 6 08:52:13 2012
    [root@c24 bin]# ./run_mdsrate_unlink
    0: c01 starting at Thu Dec 6 08:52:28 2012
    Rate: 33486.06 eff 33486.74 aggr 174.41 avg client unlinks/sec (total: 192 threads 3840000 unlinks 192 dirs 1 threads/dir 114.67 secs)
    Warning: only unlinked 3840000 files instead of 20000
    0: c01 finished at Thu Dec 6 08:54:23 2012
    [root@c24 bin]# ./run_mdsrate_mknod
    0: c01 starting at Thu Dec 6 08:54:32 2012
    Rate: 52746.29 eff 52745.00 aggr 274.71 avg client mknods/sec (total: 192 threads 3840000 mknods 192 dirs 1 threads/dir 72.80 secs)
    0: c01 finished at Thu Dec 6 08:55:45 2012
    [root@c24 bin]#

Master test result

    [root@c24 bin]# ./run_mdsrate_create
    0: c01 starting at Tue Dec 4 21:15:40 2012
    Rate: 6031.09 eff 6031.11 aggr 31.41 avg client creates/sec (total: 192 threads 3840000 creates 192 dirs 1 threads/dir 636.70 secs)
    0: c01 finished at Tue Dec 4 21:26:17 2012
    [root@c24 bin]# ./run_mdsrate_stat
    0: c01 starting at Tue Dec 4 21:27:04 2012
    Rate: 177962.00 eff 177964.59 aggr 926.90 avg client stats/sec (total: 192 threads 3840000 stats 192 dirs 1 threads/dir 21.58 secs)
    0: c01 finished at Tue Dec 4 21:27:26 2012
    [root@c24 bin]# ./run_mdsrate_unlink
    0: c01 starting at Tue Dec 4 21:29:47 2012
    Rate: 8076.06 eff 8076.08 aggr 42.06 avg client unlinks/sec (total: 192 threads 3840000 unlinks 192 dirs 1 threads/dir 475.48 secs)
    Warning: only unlinked 3840000 files instead of 20000
    0: c01 finished at Tue Dec 4 21:37:43 2012
    [root@c24 bin]# ./run_mdsrate_mknod
    0: c01 starting at Tue Dec 4 21:48:41 2012
    Rate: 10430.50 eff 10430.61 aggr 54.33 avg client mknods/sec (total: 192 threads 3840000 mknods 192 dirs 1 threads/dir 368.15 secs)
    0: c01 finished at Tue Dec 4 21:54:49 2012
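To put a number on the degradation, the `Rate:` lines from the two runs can be compared directly. The following is only a minimal sketch: the regular expression is an assumption based on the output shown above (not taken from the mdsrate source), and `parse_rates` is a hypothetical helper name.

```python
import re

# Layout of the "Rate:" summary line, inferred from the logs above
# (an assumption, not taken from the mdsrate source).
RATE_RE = re.compile(
    r"Rate:\s+([\d.]+)\s+eff\s+[\d.]+\s+aggr\s+[\d.]+\s+avg client (\w+)/sec"
)


def parse_rates(log: str) -> dict[str, float]:
    """Map each operation name (creates, stats, ...) to its reported rate."""
    return {op: float(rate) for rate, op in RATE_RE.findall(log)}


# "Rate:" lines copied from the b2_3 and master results above (totals trimmed).
B2_3_LOG = """
Rate: 26902.88 eff 26902.57 aggr 140.12 avg client creates/sec
Rate: 169702.53 eff 169703.11 aggr 883.87 avg client stats/sec
Rate: 33486.06 eff 33486.74 aggr 174.41 avg client unlinks/sec
Rate: 52746.29 eff 52745.00 aggr 274.71 avg client mknods/sec
"""
MASTER_LOG = """
Rate: 6031.09 eff 6031.11 aggr 31.41 avg client creates/sec
Rate: 177962.00 eff 177964.59 aggr 926.90 avg client stats/sec
Rate: 8076.06 eff 8076.08 aggr 42.06 avg client unlinks/sec
Rate: 10430.50 eff 10430.61 aggr 54.33 avg client mknods/sec
"""

b2_3 = parse_rates(B2_3_LOG)
master = parse_rates(MASTER_LOG)

# A factor above 1 means master is slower than b2_3 for that operation.
slowdown = {op: b2_3[op] / master[op] for op in b2_3}

for op, factor in slowdown.items():
    print(f"{op:8s} b2_3={b2_3[op]:10.2f} master={master[op]:10.2f} "
          f"b2_3/master={factor:.2f}")
```

With the numbers above, creates, unlinks and mknods come out roughly 4x to 5x slower on master, while stats are essentially unchanged.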

Attachments

- mdtest_create.png (95 kB)
- mdtest_unlink.png (83 kB)


Activity

My mistake. I thought there were different kernel series for RHEL 6.3 and 6.4, but this is only true for ldiskfs.

Andreas, this patch is against VFS code, and can support RHEL6.3/6.4 with the same set of code.

James, it would be great if you could help port this to the SLES11SP2 and FC18 kernels. IMO you don't need to include it in the LU-1812 patch, because this is a performance improvement patch which doesn't affect functionality.

The question I have is: do I include this fix with my LU-1812 patch for SLES11 SP2 kernel support, or as a separate patch? It depends on whether there are plans to land the LU-1812 patch.

This patch was only included into the RHEL6.3 patch series, not RHEL6.4 or SLES11 SP2.

For patched quota, `oprofile -d ...` shows dqput and dqget are contending on dq_list_lock; improving this would need more code changes.

I've finished the performance test against ext4. The test machine has 24 cores and 24 GB of memory.

Mount option "usrjquota=aquota.user,grpjquota=aquota.group,jqfmt=vfsv0" is used when testing with quota.

Below is test script:

    total=800000
    thrno=1
    THRMAX=32
    while [ $thrno -le $THRMAX ]; do
        count=$(($total/$thrno))
        mpirun -np $thrno -machinefile /tmp/machinefile mdtest -d /mnt/temp/d1 -i 1 -n $count -u -F >> /tmp/md.txt 2>&1
        thrno=$((2*$thrno))
    done

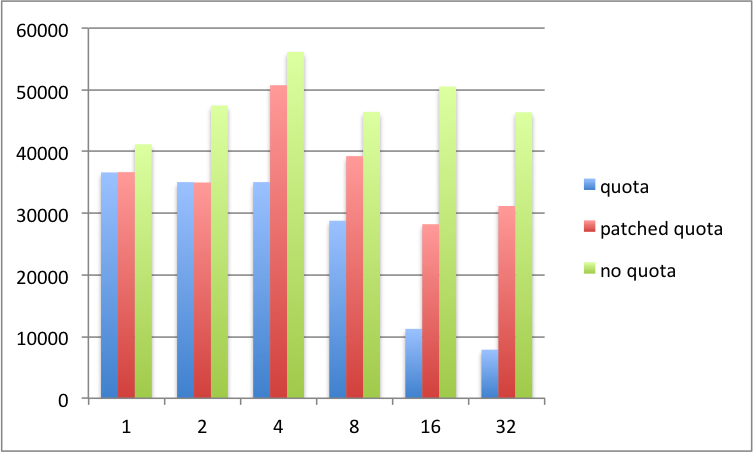

Mdtest create test result (Column is the parallel thread count):

| threads | quota | patched quota | w/o quota |
|---|---|---|---|
| 1 | 36614.457 | 36661.038 | 41179.899 |
| 2 | 35046.13 | 34979.013 | 47455.064 |
| 4 | 35046.13 | 50748.669 | 56157.671 |
| 8 | 28781.597 | 39255.844 | 46426.061 |
| 16 | 11251.192 | 28218.439 | 50534.734 |
| 32 | 7880.249 | 31173.627 | 46366.125 |

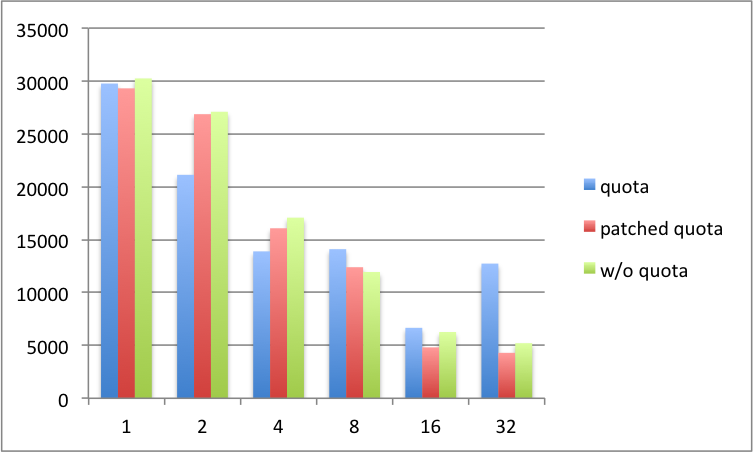

Mdtest unlink test result:

| threads | quota | patched quota | w/o quota |
|---|---|---|---|
| 1 | 29762.146 | 29307.613 | 30245.654 |
| 2 | 21131.769 | 26865.454 | 27094.563 |
| 4 | 13891.783 | 16076.384 | 17079.314 |
| 8 | 14099.972 | 12393.909 | 11943.64 |
| 16 | 6662.111 | 4812.819 | 6265.979 |
| 32 | 12735.206 | 4297.173 | 5210.164 |

The create results are as expected, but the unlink results are not. With more test threads (8 and above), the test with unpatched quota achieves the best result, even better than w/o quota.
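The effect of the patch at each thread count is easier to see as a ratio of patched to unpatched quota. A small sketch using the numbers from the two mdtest tables above; `patched_vs_quota` is a hypothetical helper name used only for illustration.

```python
# Rates copied from the mdtest tables above, keyed by thread count.
# Each tuple is (quota, patched quota, w/o quota).
CREATE = {
    1: (36614.457, 36661.038, 41179.899),
    2: (35046.13, 34979.013, 47455.064),
    4: (35046.13, 50748.669, 56157.671),
    8: (28781.597, 39255.844, 46426.061),
    16: (11251.192, 28218.439, 50534.734),
    32: (7880.249, 31173.627, 46366.125),
}
UNLINK = {
    1: (29762.146, 29307.613, 30245.654),
    2: (21131.769, 26865.454, 27094.563),
    4: (13891.783, 16076.384, 17079.314),
    8: (14099.972, 12393.909, 11943.64),
    16: (6662.111, 4812.819, 6265.979),
    32: (12735.206, 4297.173, 5210.164),
}


def patched_vs_quota(table):
    """Return patched-quota rate divided by unpatched-quota rate per thread count."""
    return {thr: patched / quota for thr, (quota, patched, _noquota) in table.items()}


create_ratio = patched_vs_quota(CREATE)
unlink_ratio = patched_vs_quota(UNLINK)

# A ratio above 1 means the patched kernel is faster than the unpatched one.
for thr in CREATE:
    print(f"threads={thr:2d} create x{create_ratio[thr]:.2f} unlink x{unlink_ratio[thr]:.2f}")
```

For create the ratio grows with the thread count, while for unlink it drops below 1 from 8 threads upwards, which is the unexpected part.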

The result of oprofile of mdtest unlink is as below:

quota:

| samples | % | image name | app name | symbol name |
|---|---|---|---|---|
| 73245 | 5.0417 | vmlinux | vmlinux | `intel_idle` |
| 68195 | 4.6941 | vmlinux | vmlinux | `__hrtimer_start_range_ns` |
| 44222 | 3.0439 | vmlinux | vmlinux | `schedule` |
| 26741 | 1.8407 | vmlinux | vmlinux | `mutex_spin_on_owner` |
| 26280 | 1.8089 | vmlinux | vmlinux | `__find_get_block` |
| 23680 | 1.6300 | vmlinux | vmlinux | `rwsem_down_failed_common` |
| 22958 | 1.5803 | ext4.ko | ext4.ko | `ext4_mark_iloc_dirty` |
| 20814 | 1.4327 | vmlinux | vmlinux | `update_curr` |
| 19519 | 1.3436 | vmlinux | vmlinux | `rb_erase` |
| 18140 | 1.2486 | vmlinux | vmlinux | `thread_return` |

patched quota:

| samples | % | image name | app name | symbol name |
|---|---|---|---|---|
| 3235409 | 50.1659 | vmlinux | vmlinux | `dqput` |
| 1140972 | 17.6911 | vmlinux | vmlinux | `dqget` |
| 347286 | 5.3848 | vmlinux | vmlinux | `mutex_spin_on_owner` |
| 278271 | 4.3147 | vmlinux | vmlinux | `dquot_mark_dquot_dirty` |
| 277685 | 4.3056 | vmlinux | vmlinux | `dquot_commit` |
| 51187 | 0.7937 | vmlinux | vmlinux | `intel_idle` |
| 38886 | 0.6029 | vmlinux | vmlinux | `__find_get_block` |
| 32483 | 0.5037 | vmlinux | vmlinux | `schedule` |
| 30017 | 0.4654 | ext4.ko | ext4.ko | `ext4_mark_iloc_dirty` |
| 29618 | 0.4592 | jbd2.ko | jbd2.ko | `jbd2_journal_add_journal_head` |
| 29483 | 0.4571 | vmlinux | vmlinux | `mutex_lock` |

w/o quota:

| samples | % | image name | app name | symbol name |
|---|---|---|---|---|
| 173301 | 6.3691 | vmlinux | vmlinux | `schedule` |
| 150041 | 5.5142 | vmlinux | vmlinux | `__audit_syscall_exit` |
| 148352 | 5.4522 | vmlinux | vmlinux | `update_curr` |
| 110868 | 4.0746 | libmpi.so.1.0.2 | libmpi.so.1.0.2 | `/usr/lib64/openmpi/lib/libmpi.so.1.0.2` |
| 105145 | 3.8642 | libc-2.12.so | libc-2.12.so | `sched_yield` |
| 104872 | 3.8542 | vmlinux | vmlinux | `sys_sched_yield` |
| 99494 | 3.6566 | mca_btl_sm.so | mca_btl_sm.so | `/usr/lib64/openmpi/lib/openmpi/mca_btl_sm.so` |
| 92536 | 3.4008 | vmlinux | vmlinux | `mutex_spin_on_owner` |
| 85868 | 3.1558 | vmlinux | vmlinux | `system_call` |

I don't quite understand the cause yet. Maybe removing dqptr_sem causes more process scheduling?
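As a quick check on how much of the patched-quota unlink profile goes to the dquot reference counting paths, the percentages from that profile can simply be summed. A minimal sketch with the top rows copied from the "patched quota" profile above; the `share` helper is hypothetical.

```python
# Top rows of the "patched quota" oprofile output above:
# (samples, percent of all samples, symbol name).
PATCHED_PROFILE = [
    (3235409, 50.1659, "dqput"),
    (1140972, 17.6911, "dqget"),
    (347286, 5.3848, "mutex_spin_on_owner"),
    (278271, 4.3147, "dquot_mark_dquot_dirty"),
    (277685, 4.3056, "dquot_commit"),
]


def share(profile, symbols):
    """Sum the sample percentage attributed to the given symbols."""
    return sum(pct for _samples, pct, sym in profile if sym in symbols)


dq_share = share(PATCHED_PROFILE, {"dqput", "dqget"})
print(f"dqput + dqget: {dq_share:.2f}% of samples")
```

In other words, about two thirds of the samples in the patched-quota unlink run land in dqput and dqget alone.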

Does this patch need to be applied to the client's kernels? If so the patch needs to be updated for other platforms as well.

Below is the test result for http://review.whamcloud.com/5010:

RHEL6, MDS with 24 cores, 24 GB of memory.

master:

[root@fat-intel-2 tests]# for i in `seq 1 5`; do sh llmount.sh >/dev/null 2>&1; tests_str="create destroy" file_count=500000 thrlo=4 mds-survey; sh llmountcleanup.sh > /dev/null 2>&1; done

Fri Feb 15 21:41:30 PST 2013 /usr/bin/mds-survey from fat-intel-2

mdt 1 file 500000 dir 4 thr 4 create 26209.85 [ 0.00,37997.04] destroy 28515.96 [ 0.00,60988.72]

mdt 1 file 500000 dir 4 thr 8 create 18912.57 [ 0.00,27998.18] destroy 21772.84 [ 999.93,33996.46]

mdt 1 file 500000 dir 4 thr 16 create 18270.86 [ 0.00,27998.82] destroy 16040.28 [ 0.00,31997.92]

mdt 1 file 500000 dir 4 thr 32 create 16305.92 [ 0.00,31997.44] destroy 19228.34 [ 0.00,31998.98]

done!

Fri Feb 15 21:47:04 PST 2013 /usr/bin/mds-survey from fat-intel-2

mdt 1 file 500000 dir 4 thr 4 create 26205.63 [ 0.00,38996.06] destroy 29596.33 [ 0.00,72992.48]

mdt 1 file 500000 dir 4 thr 8 create 19632.23 [ 0.00,27997.84] destroy 20835.51 [ 0.00,39996.84]

mdt 1 file 500000 dir 4 thr 16 create 17744.74 [ 0.00,27997.90] destroy 21308.89 [ 0.00,33997.38]

mdt 1 file 500000 dir 4 thr 32 create 15610.01 [ 0.00,28998.09] destroy 11542.69 [ 0.00,31998.40]

done!

Fri Feb 15 21:52:45 PST 2013 /usr/bin/mds-survey from fat-intel-2

mdt 1 file 500000 dir 4 thr 4 create 24109.43 [ 0.00,37996.85] destroy 33815.68 [ 0.00,75990.35]

mdt 1 file 500000 dir 4 thr 8 create 20305.14 [ 0.00,35994.82] destroy 20964.45 [ 0.00,34997.10]

mdt 1 file 500000 dir 4 thr 16 create 17943.26 [ 0.00,27997.40] destroy 14417.99 [ 0.00,31998.88]

mdt 1 file 500000 dir 4 thr 32 create 13365.02 [ 0.00,31997.60] destroy 17411.86 [ 0.00,31998.43]

done!

Fri Feb 15 21:58:44 PST 2013 /usr/bin/mds-survey from fat-intel-2

mdt 1 file 500000 dir 4 thr 4 create 26433.77 [ 0.00,37996.12] destroy 29013.88 [ 0.00,66989.01]

mdt 1 file 500000 dir 4 thr 8 create 20786.59 [ 0.00,29999.25] destroy 15879.62 [ 0.00,33995.75]

mdt 1 file 500000 dir 4 thr 16 create 17596.20 [ 0.00,27997.90] destroy 19394.02 [ 0.00,31998.88]

mdt 1 file 500000 dir 4 thr 32 create 17086.35 [ 0.00,31998.88] destroy 14710.48 [ 0.00,31998.02]

done!

Fri Feb 15 22:04:26 PST 2013 /usr/bin/mds-survey from fat-intel-2

mdt 1 file 500000 dir 4 thr 4 create 23813.65 [ 0.00,36997.34] destroy 34439.20 [ 0.00,67991.09]

mdt 1 file 500000 dir 4 thr 8 create 18209.21 [ 0.00,27997.87] destroy 22394.02 [ 0.00,36996.67]

mdt 1 file 500000 dir 4 thr 16 create 18059.66 [ 0.00,26998.25] destroy 20458.29 [ 0.00,31998.82]

mdt 1 file 500000 dir 4 thr 32 create 13597.78 [ 0.00,31998.50] destroy 17167.29 [ 0.00,31999.01]

master w/o quota:


[root@fat-intel-2 tests]# for i in `seq 1 5`; do NOSETUP=y sh llmount.sh >/dev/null 2>&1; tune2fs -O ^quota /dev/sdb1 > /dev/null 2>&1; NOFORMAT=y sh llmount.sh > /dev/null 2>&1; tests_str="create destroy" file_count=500000 thrlo=4 mds-survey; sh llmountcleanup.sh > /dev/null 2>&1; done

Fri Feb 15 22:20:09 PST 2013 /usr/bin/mds-survey from fat-intel-2

mdt 1 file 500000 dir 4 thr 4 create 30366.94 [ 0.00,47995.01] destroy 43404.01 [ 0.00,124985.75]

mdt 1 file 500000 dir 4 thr 8 create 41967.03 [ 0.00,127982.59] destroy 37145.02 [ 0.00,78991.23]

mdt 1 file 500000 dir 4 thr 16 create 40425.22 [ 0.00,119987.76] destroy 16860.10 [ 0.00,56991.45]

mdt 1 file 500000 dir 4 thr 32 create 31995.01 [ 0.00,103991.26] destroy 11203.98 [ 0.00,50994.14]

done!

Fri Feb 15 22:25:02 PST 2013 /usr/bin/mds-survey from fat-intel-2

mdt 1 file 500000 dir 4 thr 4 create 30934.22 [ 0.00,48997.84] destroy 43168.82 [ 0.00,126982.73]

mdt 1 file 500000 dir 4 thr 8 create 48911.42 [ 0.00,129987.78] destroy 19988.51 [ 0.00,49996.20]

mdt 1 file 500000 dir 4 thr 16 create 45156.92 [ 0.00,118988.46] destroy 18769.52 [ 0.00,74989.50]

mdt 1 file 500000 dir 4 thr 32 create 28270.60 [ 0.00,95992.80] destroy 18232.65 [ 0.00,94987.27]

done!

Fri Feb 15 22:29:41 PST 2013 /usr/bin/mds-survey from fat-intel-2

mdt 1 file 500000 dir 4 thr 4 create 30139.03 [ 0.00,48995.93] destroy 50610.31 [ 0.00,118986.08]

mdt 1 file 500000 dir 4 thr 8 create 50995.45 [ 0.00,131979.15] destroy 37575.37 [ 0.00,85987.79]

mdt 1 file 500000 dir 4 thr 16 create 52478.59 [ 0.00,98981.09] destroy 13508.81 [ 0.00,52991.36]

mdt 1 file 500000 dir 4 thr 32 create 26142.72 [ 0.00,95995.01] destroy 21777.04 [ 0.00,100996.57]

done!

Fri Feb 15 22:34:12 PST 2013 /usr/bin/mds-survey from fat-intel-2

mdt 1 file 500000 dir 4 thr 4 create 30747.88 [ 0.00,46996.01] destroy 44731.56 [ 0.00,115981.44]

mdt 1 file 500000 dir 4 thr 8 create 51109.70 [ 0.00,130978.52] destroy 34416.83 [ 0.00,84982.24]

mdt 1 file 500000 dir 4 thr 16 create 49890.86 [ 0.00,117985.96] destroy 14209.59 [ 0.00,56995.04]

mdt 1 file 500000 dir 4 thr 32 create 27091.59 [ 0.00,114989.65] destroy 43223.11 [ 0.00,104982.15]

done!

Fri Feb 15 22:38:37 PST 2013 /usr/bin/mds-survey from fat-intel-2

mdt 1 file 500000 dir 4 thr 4 create 31068.91 [ 0.00,49997.85] destroy 42854.34 [ 0.00,128981.30]

mdt 1 file 500000 dir 4 thr 8 create 46053.50 [ 0.00,131982.05] destroy 41475.42 [ 0.00,89990.91]

mdt 1 file 500000 dir 4 thr 16 create 45316.94 [ 0.00,118992.03] destroy 12968.13 [ 0.00,75987.61]

mdt 1 file 500000 dir 4 thr 32 create 30377.61 [ 0.00,122984.14] destroy 13852.39 [ 0.00,79989.60]

master w/ patched quota:

[root@fat-intel-2 tests]# for i in `seq 1 5`; do sh llmount.sh >/dev/null 2>&1; tests_str="create destroy" file_count=500000 thrlo=4 mds-survey; sh llmountcleanup.sh > /dev/null 2>&1; done

Sun Feb 17 07:11:38 PST 2013 /usr/bin/mds-survey from fat-intel-2.lab.whamcloud.com

mdt 1 file 500000 dir 4 thr 4 create 26283.65 [ 0.00,41997.40] destroy 45303.57 [ 0.00,92987.45]

mdt 1 file 500000 dir 4 thr 8 create 33285.82 [ 0.00,65991.95] destroy 35571.95 [ 0.00,77989.39]

mdt 1 file 500000 dir 4 thr 16 create 30549.32 [ 0.00,56995.78] destroy 27374.45 [ 0.00,68995.03]

mdt 1 file 500000 dir 4 thr 32 create 16176.84 [ 0.00,51996.62] destroy 14717.10 [ 0.00,57994.66]

done!

Sun Feb 17 07:16:26 PST 2013 /usr/bin/mds-survey from fat-intel-2.lab.whamcloud.com

mdt 1 file 500000 dir 4 thr 4 create 26496.66 [ 0.00,40996.64] destroy 44832.99 [ 0.00,97986.09]

mdt 1 file 500000 dir 4 thr 8 create 33672.99 [ 0.00,66996.11] destroy 35580.20 [ 0.00,65993.99]

mdt 1 file 500000 dir 4 thr 16 create 31749.41 [ 0.00,55994.96] destroy 29304.10 [ 0.00,69994.82]

mdt 1 file 500000 dir 4 thr 32 create 22033.01 [ 0.00,56994.98] destroy 9419.67 [ 0.00,43995.07]

done!

Sun Feb 17 07:21:21 PST 2013 /usr/bin/mds-survey from fat-intel-2.lab.whamcloud.com

mdt 1 file 500000 dir 4 thr 4 create 26988.79 [ 0.00,39998.72] destroy 43051.27 [ 0.00,100982.03]

mdt 1 file 500000 dir 4 thr 8 create 33265.27 [ 0.00,65992.15] destroy 34237.19 [ 0.00,78991.63]

mdt 1 file 500000 dir 4 thr 16 create 31354.47 [ 0.00,57996.23] destroy 26779.46 [ 0.00,66996.11]

mdt 1 file 500000 dir 4 thr 32 create 17949.45 [ 0.00,59996.04] destroy 12178.20 [ 0.00,62994.96]

done!

Sun Feb 17 07:26:15 PST 2013 /usr/bin/mds-survey from fat-intel-2.lab.whamcloud.com

mdt 1 file 500000 dir 4 thr 4 create 27104.78 [ 0.00,39998.76] destroy 42821.07 [ 0.00,96990.49]

mdt 1 file 500000 dir 4 thr 8 create 33137.66 [ 0.00,64996.04] destroy 33041.39 [ 0.00,76992.76]

mdt 1 file 500000 dir 4 thr 16 create 30752.19 [ 0.00,56996.30] destroy 27265.47 [ 0.00,67994.63]

mdt 1 file 500000 dir 4 thr 32 create 15830.85 [ 0.00,60996.71] destroy 10042.26 [ 0.00,47993.38]

done!

Sun Feb 17 07:31:22 PST 2013 /usr/bin/mds-survey from fat-intel-2.lab.whamcloud.com

mdt 1 file 500000 dir 4 thr 4 create 27513.04 [ 0.00,40998.57] destroy 39405.62 [ 0.00,97989.32]

mdt 1 file 500000 dir 4 thr 8 create 34868.78 [ 0.00,78996.13] destroy 40266.84 [ 0.00,73996.52]

mdt 1 file 500000 dir 4 thr 16 create 28035.39 [ 0.00,58996.05] destroy 18330.08 [ 0.00,68995.86]

mdt 1 file 500000 dir 4 thr 32 create 22727.89 [ 0.00,56996.58] destroy 10760.47 [ 0.00,41993.53]

2.3:

[root@fat-intel-2 tests]# for i in `seq 1 5`; do sh llmount.sh >/dev/null 2>&1; tests_str="create destroy" file_count=500000 thrlo=4 mds-survey; sh llmountcleanup.sh >/dev/null 2>&1; done

Mon Feb 18 18:37:29 PST 2013 /usr/bin/mds-survey from fat-intel-2.lab.whamcloud.com

mdt 1 file 500000 dir 4 thr 4 create 26145.12 [ 0.00,47997.65] destroy 53906.35 [ 0.00,108985.07]

mdt 1 file 500000 dir 4 thr 8 create 51723.17 [ 0.00,125977.07] destroy 30210.63 [ 0.00,86986.95]

mdt 1 file 500000 dir 4 thr 16 create 47965.56 [ 0.00,117987.02] destroy 15171.14 [ 0.00,70989.64]

mdt 1 file 500000 dir 4 thr 32 create 27712.65 [ 0.00,95991.46] destroy 25103.89 [ 0.00,94985.37]

done!

Mon Feb 18 18:42:00 PST 2013 /usr/bin/mds-survey from fat-intel-2.lab.whamcloud.com

mdt 1 file 500000 dir 4 thr 4 create 31616.35 [ 0.00,47994.00] destroy 46495.53 [ 0.00,114988.27]

mdt 1 file 500000 dir 4 thr 8 create 49424.94 [ 0.00,128977.94] destroy 28846.65 [ 0.00,69988.31]

mdt 1 file 500000 dir 4 thr 16 create 44127.39 [ 0.00,103993.14] destroy 13484.81 [ 0.00,69987.82]

mdt 1 file 500000 dir 4 thr 32 create 27848.28 [ 0.00,97995.39] destroy 27959.58 [ 0.00,96993.40]

done!

Mon Feb 18 18:46:33 PST 2013 /usr/bin/mds-survey from fat-intel-2.lab.whamcloud.com

mdt 1 file 500000 dir 4 thr 4 create 31294.53 [ 0.00,47996.83] destroy 46002.83 [ 0.00,124981.75]

mdt 1 file 500000 dir 4 thr 8 create 49600.28 [ 0.00,127980.80] destroy 39120.42 [ 0.00,83984.29]

mdt 1 file 500000 dir 4 thr 16 create 41215.63 [ 0.00,115980.86] destroy 14951.03 [ 0.00,55992.05]

mdt 1 file 500000 dir 4 thr 32 create 29834.72 [ 0.00,95986.66] destroy 47968.23 [ 0.00,105982.51]

done!

Mon Feb 18 18:50:56 PST 2013 /usr/bin/mds-survey from fat-intel-2.lab.whamcloud.com

mdt 1 file 500000 dir 4 thr 4 create 30903.29 [ 0.00,47995.97] destroy 44842.92 [ 0.00,120987.18]

mdt 1 file 500000 dir 4 thr 8 create 49633.18 [ 0.00,127962.12] destroy 44138.19 [ 0.00,87990.15]

mdt 1 file 500000 dir 4 thr 16 create 48650.44 [ 0.00,116986.08] destroy 21194.31 [ 0.00,83987.49]

mdt 1 file 500000 dir 4 thr 32 create 27149.35 [ 0.00,90995.91] destroy 22780.16 [ 0.00,102984.66]

done!

Mon Feb 18 18:55:13 PST 2013 /usr/bin/mds-survey from fat-intel-2.lab.whamcloud.com

mdt 1 file 500000 dir 4 thr 4 create 30643.97 [ 0.00,46996.29] destroy 44520.51 [ 0.00,115985.04]

mdt 1 file 500000 dir 4 thr 8 create 47911.39 [ 0.00,125981.98] destroy 32561.59 [ 0.00,79991.20]

mdt 1 file 500000 dir 4 thr 16 create 43045.28 [ 0.00,83986.48] destroy 17939.69 [ 0.00,56991.62]

mdt 1 file 500000 dir 4 thr 32 create 26503.95 [ 0.00,95985.41] destroy 31324.31 [ 0.00,97992.16]

done!
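Since each configuration above was run five times, the results are easier to compare once averaged per thread count. A minimal sketch, assuming the mds-survey line layout shown in the results above; the regular expression and the `average_by_threads` helper are illustrative only and not part of mds-survey.

```python
import re
from collections import defaultdict
from statistics import mean

# Matches e.g. "... thr 4 create 26209.85 [ 0.00,37997.04] destroy 28515.96 [...]".
# The layout is inferred from the output above (an assumption).
LINE_RE = re.compile(
    r"thr\s+(\d+)\s+create\s+([\d.]+)\s+\[.*?\]\s+destroy\s+([\d.]+)"
)


def average_by_threads(output: str) -> dict[int, tuple[float, float]]:
    """Return {thread count: (mean create rate, mean destroy rate)}."""
    creates, destroys = defaultdict(list), defaultdict(list)
    for match in LINE_RE.finditer(output):
        thr = int(match.group(1))
        creates[thr].append(float(match.group(2)))
        destroys[thr].append(float(match.group(3)))
    return {thr: (mean(creates[thr]), mean(destroys[thr])) for thr in creates}


# The five "thr 4" lines from the master runs above.
MASTER_THR4 = """
mdt 1 file 500000 dir 4 thr 4 create 26209.85 [ 0.00,37997.04] destroy 28515.96 [ 0.00,60988.72]
mdt 1 file 500000 dir 4 thr 4 create 26205.63 [ 0.00,38996.06] destroy 29596.33 [ 0.00,72992.48]
mdt 1 file 500000 dir 4 thr 4 create 24109.43 [ 0.00,37996.85] destroy 33815.68 [ 0.00,75990.35]
mdt 1 file 500000 dir 4 thr 4 create 26433.77 [ 0.00,37996.12] destroy 29013.88 [ 0.00,66989.01]
mdt 1 file 500000 dir 4 thr 4 create 23813.65 [ 0.00,36997.34] destroy 34439.20 [ 0.00,67991.09]
"""

averages = average_by_threads(MASTER_THR4)
for thr, (create, destroy) in sorted(averages.items()):
    print(f"thr {thr:2d}: create {create:.2f} destroy {destroy:.2f}")
```

Feeding the complete output of one configuration to `average_by_threads` gives one row per thread count (4, 8, 16, 32), which can then be compared across master, master w/o quota, master w/ patched quota and 2.3.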

Started to look at this patch for both FC18 and SLES11 SP2. For FC18 server support we need several patches to make it build. Because of this I doubt it will make it in the 2.4 release. Now the SLES11 SP2 support works with master. What I was thinking is to break up the LU-1812 patch into the FC18 part and the SLES11SP2 code into this patch. I could add in this fix as well. Would you be okay with that?