Prakesh,

We can't really do any aggressive testing as this system is in use. However, right now it only has 1 primary user who is populating the file system. Especially once the creates grind down to a trickle, we can have the work halt and do some minor things and restart - naturally they're OK with that as the throughput makes it worthwhile. If there's anything simple we could do or report we will, but probably not patch right now as we're working around the issue.

Regarding why the patches help Lustre's workload: while it's interesting, don't take the effort to write that up on my account.

Just for reference or if it helps in any way, here's what we see and are doing -

We have a system with 1 MDS and 12 OSSs. Data is being written to it from existing NFS-mounted filesystems (NAS, basically). There are, I think, 6 NAS machines on 1GB or channeled dual 1GB ethernet. These flow through 3 lrouter machines with 10GB ethernet to Infiniband and the servers. We can also test on Infiniband-connected cluster nodes if needed, but when we see the create problem it of course doesn't matter.

What we saw:

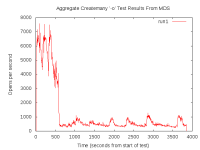

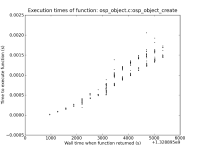

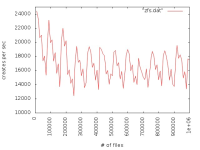

Once our user had everything set and was writing at a reasonable rate, after a week or two file creation dropped by a few orders of magnitude, to about 2 per second. That is really bad, as noted in this ticket.

On the MDS we noticed txg_sync and arc_adapt were particularly busy, but the system CPU was mostly idle, as also noted above.

To try to at least put the problem off, we increased our ARC cache settings and restarted; performance was back to normal.
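For watching how full the ARC gets between restarts, here is a rough sketch that parses the kstat text ZFS on Linux exposes at /proc/spl/kstat/zfs/arcstats. The field names it reads (`size`, `c_max`, `arc_meta_used`, `arc_meta_limit`) are assumptions about what the file contains on a given release, so check them against your own system; the helper names `parse_arcstats` and `fill_ratio` are made up for illustration.

```python
from pathlib import Path

# kstat file exposed by ZFS on Linux; absent on machines without the zfs module.
ARCSTATS = Path("/proc/spl/kstat/zfs/arcstats")


def parse_arcstats(text):
    """Return {name: int} from kstat text made of 'name type data' rows."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        # Data rows have exactly three columns with a numeric last column;
        # this also skips the two header lines at the top of the file.
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


def fill_ratio(stats, used_key, limit_key):
    """Return used/limit, or None if a field is missing or the limit is 0."""
    used, limit = stats.get(used_key), stats.get(limit_key)
    if used is None or not limit:
        return None
    return used / limit


if __name__ == "__main__" and ARCSTATS.exists():
    s = parse_arcstats(ARCSTATS.read_text())
    # Field names below are assumptions; adjust to what your arcstats lists.
    print("ARC size / c_max:", fill_ratio(s, "size", "c_max"))
    print("meta used / limit:", fill_ratio(s, "arc_meta_used", "arc_meta_limit"))
```

Logging these ratios periodically on the MDS would at least show whether the slowdown lines up with the cache filling.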

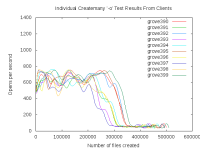

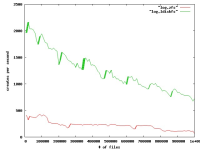

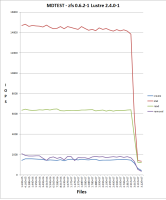

For reference, with mdtest in initial testing we saw around 2000 creates/second. We can't run mdtest right now, so we've just been doing simple tests from one node (fdtree is nice, by the way) and comparing against the same tests on another ZFS-backed file system (though not nearly so busy) and a 2.3 ldiskfs-based system. This normally gets perhaps 200 to 300 creates per second. All of these file systems are in use, so we can't be too accurate.
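As an illustration of the kind of single-node check meant here, the sketch below times empty-file creates in one directory. It is neither mdtest nor fdtree, only a minimal stand-in; the function name `measure_create_rate` and the default count are arbitrary choices for the example.

```python
import os
import tempfile
import time


def measure_create_rate(directory, count=1000):
    """Create `count` empty files in `directory`; return creates per second."""
    start = time.perf_counter()
    for i in range(count):
        # O_EXCL ensures each call is a real create, not an open of an
        # already existing file.
        fd = os.open(
            os.path.join(directory, f"f{i:08d}"),
            os.O_CREAT | os.O_EXCL | os.O_WRONLY,
            0o644,
        )
        os.close(fd)
    elapsed = time.perf_counter() - start
    return count / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Swap the temp dir for a scratch directory on the file system under test.
    with tempfile.TemporaryDirectory() as d:
        print(f"{measure_create_rate(d):.0f} creates/s")
```

On a busy file system the number will be noisy, so it is only useful for spotting order-of-magnitude changes like the one described above.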

After a while performance degraded again, so we stop and restart lustre/zfs on the mds.

I haven't been looking at the OSSs, but I just checked, and while these do look to have full ARC caches, I only see one report of slow creates in the logs across all servers:

Lustre: arcdata-OST001c: Slow creates, 384/512 objects created at a rate of 7/s
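To check the logs on all servers for more of these, something like the following could pull the numbers out of each message. The pattern is built only from the single line quoted above, so other Lustre versions may word the message differently; `parse_slow_creates` is a hypothetical helper name.

```python
import re

# Pattern derived from the one log line quoted above; treat it as an assumption.
SLOW_CREATES = re.compile(
    r"Lustre: (?P<target>\S+): Slow creates, "
    r"(?P<created>\d+)/(?P<requested>\d+) objects created "
    r"at a rate of (?P<rate>\d+)/s"
)


def parse_slow_creates(line):
    """Return a dict of fields from a 'Slow creates' message, or None."""
    m = SLOW_CREATES.search(line)
    if m is None:
        return None
    return {
        "target": m.group("target"),
        "created": int(m.group("created")),
        "requested": int(m.group("requested")),
        "rate_per_s": int(m.group("rate")),
    }


line = "Lustre: arcdata-OST001c: Slow creates, 384/512 objects created at a rate of 7/s"
print(parse_slow_creates(line))
# {'target': 'arcdata-OST001c', 'created': 384, 'requested': 512, 'rate_per_s': 7}
```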

So my guess is if these patches help MDS issues more so than OSS, that's at least addressing the more immediate issue for us.

If we had a way to completely reset the ARC without restarting the Lustre and ZFS services, that would be useful.

Scott

LU-2600 lustre metadata performance is very slow on zfs

We have upgraded to zfs-0.6.3-1, and while it looks promising that this issue is solved (or greatly improved), I haven't been able to verify this. I managed to run about 20 million file writes, and need more than 30 million to get past the point where things degraded last time.

I'd like to verify it's improved, but can't do it with that system now.

While testing we did unfortunately see decreased numbers for stat; see LU-5212.

Scott