Details

- Type: Improvement
- Resolution: Fixed
- Priority: Minor
- Affects Version/s: Lustre 2.4.1
- Environment: Seen in two environments: an AWS cloud setup (Robert R.) and a dual-OSS setup (3 SSDs per OST) over 2x10 GbE.
- Severity: 3
- Rank: 11385

Description

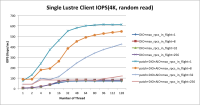

Attached to this Jira ticket are some numbers from the direct I/O tests (write operations only).

We noticed that setting RPCs in flight to 256 in these tests gives poorer performance. In the tests shown here, max RPCs in flight is set to 32.
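On a Lustre client the "RPCs in flight" setting is presumably the per-OSC tunable `max_rpcs_in_flight`, which is read with `lctl get_param` and changed with `lctl set_param`. The sketch below wraps those two commands so the 32 vs. 256 comparison can be scripted. It assumes `lctl` is on `PATH` and that the caller is root when setting values; the helper names (`parse_get_param`, `get_rpcs_in_flight`, `set_rpcs_in_flight`) are hypothetical and not part of Lustre.

```python
import subprocess

# Hypothetical helpers (not part of Lustre): thin wrappers around the
# `lctl get_param` / `lctl set_param` commands. Assumes a Lustre client
# with `lctl` on PATH; setting the value normally requires root.
PARAM = "osc.*.max_rpcs_in_flight"


def parse_get_param(output: str) -> dict:
    """Turn `lctl get_param` lines of the form 'name=value' into {name: int}."""
    values = {}
    for line in output.splitlines():
        name, sep, value = line.strip().partition("=")
        if sep:  # skip blank or malformed lines
            values[name] = int(value)
    return values


def get_rpcs_in_flight() -> dict:
    """Read the current max_rpcs_in_flight for every OSC on this client."""
    result = subprocess.run(
        ["lctl", "get_param", PARAM],
        check=True, capture_output=True, text=True,
    )
    return parse_get_param(result.stdout)


def set_rpcs_in_flight(n: int) -> None:
    """Apply the same max_rpcs_in_flight to every OSC (e.g. 32 or 256)."""
    if n < 1:
        raise ValueError("max_rpcs_in_flight must be positive")
    subprocess.run(["lctl", "set_param", f"{PARAM}={n}"], check=True)
```

For example, `set_rpcs_in_flight(32)` before one fio run and `set_rpcs_in_flight(256)` before the next, with `get_rpcs_in_flight()` to confirm what each OSC actually uses. Values set this way with plain `set_param` do not persist across a remount.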

A sample FIO output:

    fio.4k.write.1.23499: (g=0): rw=write, bs=4K-4K/4K-4K/4K-4K, ioengine=sync, iodepth=1
    fio-2.1.2
    Starting 1 process
    fio.4k.write.1.23499: Laying out IO file(s) (1 file(s) / 10MB)
    fio.4k.write.1.23499: (groupid=0, jobs=1): err= 0: pid=10709: Fri Nov 1 11:47:29 2013
      write: io=10240KB, bw=2619.7KB/s, iops=654, runt= 3909msec
        clat (usec): min=579, max=5283, avg=1520.43, stdev=1216.20
         lat (usec): min=580, max=5299, avg=1521.37, stdev=1216.22
        clat percentiles (usec):
         | 1.00th=[ 604], 5.00th=[ 652], 10.00th=[ 668], 20.00th=[ 708],
         | 30.00th=[ 732], 40.00th=[ 756], 50.00th=[ 796], 60.00th=[ 844],
         | 70.00th=[ 1320], 80.00th=[ 3440], 90.00th=[ 3568], 95.00th=[ 3632],
         | 99.00th=[ 3824], 99.50th=[ 5024], 99.90th=[ 5216], 99.95th=[ 5280],
         | 99.99th=[ 5280]
        bw (KB /s): min= 1224, max= 4366, per=97.64%, avg=2557.14, stdev=1375.64
        lat (usec) : 750=37.50%, 1000=30.12%
        lat (msec) : 2=5.00%, 4=26.76%, 10=0.62%
      cpu : usr=0.92%, sys=8.70%, ctx=2562, majf=0, minf=25
      IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
         submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         issued : total=r=0/w=2560/d=0, short=r=0/w=0/d=0
    Run status group 0 (all jobs):
      WRITE: io=10240KB, aggrb=2619KB/s, minb=2619KB/s, maxb=2619KB/s, mint=3909msec, maxt=3909msec
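As a sanity check on the sample fio output, its headline numbers can be re-derived from the other fields. With `ioengine=sync` and `iodepth=1`, the single fio job has only one write outstanding at a time, so by Little's law (throughput = outstanding I/Os / mean latency) its IOPS is bounded by the per-write latency. The constants below are copied from the output; the function names are illustrative only.

```python
# Figures copied from the sample fio output above (4K sync writes, iodepth=1).
IO_KB = 10240          # write: io=10240KB
RUNTIME_MS = 3909      # runt= 3909msec
WRITES = 2560          # issued: w=2560
BLOCK_KB = 4           # bs=4K
AVG_LAT_US = 1521.37   # lat (usec): avg=1521.37


def bandwidth_kb_s(io_kb: float, runtime_ms: float) -> float:
    """Throughput = data written / wall-clock runtime."""
    return io_kb / (runtime_ms / 1000.0)


def iops(ios: int, runtime_ms: float) -> float:
    """I/O operations completed per second."""
    return ios / (runtime_ms / 1000.0)


def littles_law_iops(depth: int, avg_lat_us: float) -> float:
    """Little's law: throughput = outstanding I/Os / mean latency."""
    return depth / (avg_lat_us / 1e6)


if __name__ == "__main__":
    assert WRITES * BLOCK_KB == IO_KB  # 2560 writes x 4 KB = 10240 KB
    print(f"bandwidth ~ {bandwidth_kb_s(IO_KB, RUNTIME_MS):.1f} KB/s")  # fio reports 2619.7
    print(f"iops      ~ {iops(WRITES, RUNTIME_MS):.1f}")               # fio reports 654
    print(f"iops bound at depth 1 ~ {littles_law_iops(1, AVG_LAT_US):.1f}")
```

This prints roughly 2619.6 KB/s, 654.9 IOPS and a bound of 657.3 IOPS, matching fio (which truncates IOPS to 654). The bound sits slightly above the measured rate because 2560 × 1521.37 µs ≈ 3.895 s, a little less than the 3909 ms runtime; the remainder is time spent between writes.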


Issue Links

- is duplicated by
  - LU-13786 Take server-side locks for direct i/o (Closed)
- is related to
  - LU-13900 don't call aio_complete() in lustre upon errors (Resolved)
  - LU-247 Lustre client slow performance on BG/P IONs: unaligned DIRECT_IO (Resolved)
  - LU-13697 short io for AIO (Resolved)
  - LU-9409 Lustre small IO write performance improvement (Resolved)
  - LU-10278 lfs migrate: make use of direct i/o optional (Resolved)
  - LU-13801 Enable io_uring interface for Lustre client (Resolved)
- is related to
  - LU-12687 Fast ENOSPC on direct I/O (Resolved)
  - LU-13798 Improve direct i/o performance with multiple stripes: Submit all stripes of a DIO and then wait (Resolved)


Let's reopen this ticket once we have a more convincing solution for this issue.