Details

-

Bug

-

Resolution: Fixed

-

Critical

-

Lustre 2.4.2

-

3

-

13336

Description

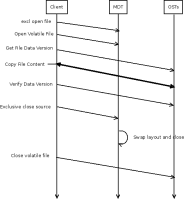

While migrating a file with "lfs migrate", if a process tries to truncate the file, both lfs migrate and truncating processes will deadlock.

This will result in both processes never finishing (unless it is killed) and watchdog messages saying that the processes did not progress for the last XXX seconds.

Here is a reproducer:

[root@lustre24cli ~]# cat reproducer.sh

#!/bin/sh

FS=/test

FILE=${FS}/file

rm -f ${FILE}

# Create a file on OST 1 of size 512M

lfs setstripe -o 1 -c 1 ${FILE}

dd if=/dev/zero of=${FILE} bs=1M count=512

echo 3 > /proc/sys/vm/drop_caches

# Launch a migrate to OST 0 and a bit later open it for write

lfs migrate -i 0 --block ${FILE} &

sleep 2

dd if=/dev/zero of=${FILE} bs=1M count=512

Once the last dd tries to open the file, both lfs and dd processes stay forever with this stack:

lfs stack:

[<ffffffff8128e864>] call_rwsem_down_read_failed+0x14/0x30 [<ffffffffa08d98dd>] ll_file_io_generic+0x29d/0x600 [lustre] [<ffffffffa08d9d7f>] ll_file_aio_read+0x13f/0x2c0 [lustre] [<ffffffffa08da61c>] ll_file_read+0x16c/0x2a0 [lustre] [<ffffffff811896b5>] vfs_read+0xb5/0x1a0 [<ffffffff811897f1>] sys_read+0x51/0x90 [<ffffffff8100b072>] system_call_fastpath+0x16/0x1b [<ffffffffffffffff>] 0xffffffffffffffff

dd stack:

[<ffffffffa03436fe>] cfs_waitq_wait+0xe/0x10 [libcfs] [<ffffffffa04779fa>] cl_lock_state_wait+0x1aa/0x320 [obdclass] [<ffffffffa04781eb>] cl_enqueue_locked+0x15b/0x1f0 [obdclass] [<ffffffffa0478d6e>] cl_lock_request+0x7e/0x270 [obdclass] [<ffffffffa047e00c>] cl_io_lock+0x3cc/0x560 [obdclass] [<ffffffffa047e242>] cl_io_loop+0xa2/0x1b0 [obdclass] [<ffffffffa092a8c8>] cl_setattr_ost+0x208/0x2c0 [lustre] [<ffffffffa08f8a0e>] ll_setattr_raw+0x9ce/0x1000 [lustre] [<ffffffffa08f909b>] ll_setattr+0x5b/0xf0 [lustre] [<ffffffff811a7348>] notify_change+0x168/0x340 [<ffffffff81187074>] do_truncate+0x64/0xa0 [<ffffffff8119bcc1>] do_filp_open+0x861/0xd20 [<ffffffff81185d39>] do_sys_open+0x69/0x140 [<ffffffff81185e50>] sys_open+0x20/0x30 [<ffffffff8100b072>] system_call_fastpath+0x16/0x1b [<ffffffffffffffff>] 0xffffffffffffffff

Attachments

Issue Links

- is related to

-

LU-6785 Interop 2.7.0<->master sanity test_56w: cannot swap layouts: Device or resource busy

-

- Resolved

-

-

LU-5915 racer test_1: FAIL: test_1 failed with 4

-

- Resolved

-

-

LU-7073 racer with OST object migration hangs on cleanup

-

- Resolved

-

- is related to

-

LU-6903 racer file migration crash ASSERTION( lov->lo_type == LLT_RAID0 )

-

- Resolved

-