Details

Type: Improvement

Resolution: Unresolved

Priority: Major

Argonne's Theta machine is a Cray running lustre: 2.7.3.74

kernel: patchless_client

build: 2.7.3.74

Description

I'm going to bug Cray about this but Andreas said "we can't fix problems that aren't in Jira" so here I am.

In the old days one would get striping information via an ioctl:

    struct lov_user_md *lum;
    size_t lumsize = sizeof(struct lov_user_md) +
                     LOV_MAX_STRIPE_COUNT * sizeof(struct lov_user_ost_data);

    lum = malloc(lumsize);  /* room for the maximum number of stripes */
    lum->lmm_magic = LOV_USER_MAGIC;
    ret = ioctl(fd, LL_IOC_LOV_GETSTRIPE, (void *)lum);
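The size expression above implies a fixed header followed by one entry per stripe, so the caller has to supply a buffer large enough for both before issuing the ioctl. A minimal sketch of that allocation pattern follows. The types and functions (`demo_header`, `demo_entry`, `demo_buffer_size`, `demo_alloc`) are hypothetical stand-ins chosen so the example builds without the Lustre headers; they are not the real `struct lov_user_md` or `struct lov_user_ost_data`.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical stand-ins; NOT the real Lustre structures. */
struct demo_entry {
    uint32_t index;
};

struct demo_header {
    uint32_t magic;
    uint16_t count;
    struct demo_entry entries[];   /* flexible array member */
};

/* Bytes needed for the header plus max_entries trailing entries. */
size_t demo_buffer_size(size_t max_entries)
{
    return sizeof(struct demo_header) +
           max_entries * sizeof(struct demo_entry);
}

/* Allocate a zeroed buffer sized for max_entries and set the magic.
 * Returns NULL on allocation failure; the caller frees the result. */
struct demo_header *demo_alloc(size_t max_entries, uint32_t magic)
{
    struct demo_header *h = calloc(1, demo_buffer_size(max_entries));

    if (h != NULL)
        h->magic = magic;
    return h;
}
```

With the real headers, the same shape would presumably apply with `lumsize` as the allocation size and `LOV_USER_MAGIC` as the magic value.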

Then the llapi_ routines showed up:

ret = llapi_file_get_stripe(opt_file, lum);

and even more sophisticated llapi_layout_ routines:

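    struct llapi_layout *layout;
    uint64_t count, size, index;  /* the llapi_layout getters write 64-bit values */

    layout = llapi_layout_get_by_fd(fd, 0);
    llapi_layout_stripe_count_get(layout, &count);
    llapi_layout_stripe_size_get(layout, &size);
    llapi_layout_ost_index_get(layout, 0, &index);
    lum->lmm_stripe_count = count;
    lum->lmm_stripe_size = size;
    lum->lmm_stripe_offset = index;
    llapi_layout_free(layout);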
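One way to compare the three approaches above is to wrap each call in a monotonic-clock timer and average over several iterations. The following is only a sketch under stated assumptions: `average_ms`, `query_fn`, and `stand_in_query` are hypothetical names, the stand-in performs no Lustre I/O, and an MPI job would still need to combine the per-process results (for example with a reduction).

```c
#define _POSIX_C_SOURCE 200809L
#include <stddef.h>
#include <time.h>

/* Hypothetical callback type: one stripe-information query per call. */
typedef void (*query_fn)(void *arg);

/* Stand-in for a real query (ioctl, llapi_file_get_stripe, llapi_layout_*);
 * it only counts invocations so the harness can be exercised anywhere. */
void stand_in_query(void *arg)
{
    int *calls = arg;

    (*calls)++;
}

/* Run fn(arg) iters times and return the mean wall-clock time per call
 * in milliseconds, or -1.0 if iters is not positive. */
double average_ms(query_fn fn, void *arg, int iters)
{
    struct timespec start, end;
    double elapsed_ms;

    if (iters <= 0)
        return -1.0;

    clock_gettime(CLOCK_MONOTONIC, &start);
    for (int i = 0; i < iters; i++)
        fn(arg);
    clock_gettime(CLOCK_MONOTONIC, &end);

    elapsed_ms = (end.tv_sec - start.tv_sec) * 1e3 +
                 (end.tv_nsec - start.tv_nsec) / 1e6;
    return elapsed_ms / iters;
}
```

To time a real interface, the stand-in would be replaced by a small wrapper that performs one query on an already-open file descriptor, so that open and close costs are kept out of the measurement.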

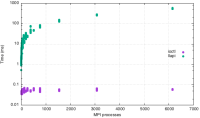

I haven't done a full scaling run, but small-scale tests don't look great for the newer interfaces. Average time to get the stripe size for a 2-node, 128-process MPI job:

ioctl: 17.2 ms

llapi_file_get_stripe: 142.0 ms

llapi_layout: 2220 ms

Wow! I want to use the new interfaces but these overheads are bonkers.
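To put numbers on that: relative to the ioctl baseline, the timings above work out to roughly 8x for llapi_file_get_stripe (142.0 / 17.2) and roughly 129x for llapi_layout (2220 / 17.2). A small sketch of the arithmetic, where `slowdown` is a hypothetical helper name:

```c
/* Ratio of a measured time to a baseline time, both in the same unit.
 * Returns -1.0 when the baseline is not positive. */
double slowdown(double measured_ms, double baseline_ms)
{
    if (baseline_ms <= 0.0)
        return -1.0;
    return measured_ms / baseline_ms;
}

/* Using the figures reported above:
 *   slowdown(142.0, 17.2)   is about 8.3   (llapi_file_get_stripe vs ioctl)
 *   slowdown(2220.0, 17.2)  is about 129   (llapi_layout vs ioctl)
 */
```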

Issue Links

- is related to
  - LU-14316 lfs: using old ioctl(LL_IOC_LOV_GETSTRIPE), use llapi_layout_get_by_path() (Open)
  - LU-14897 "lfs setstripe/migrate" should check if pool exists for PFL components (Open)
  - LU-17025 Invalid pool name does not return error (Resolved)
  - LU-10500 Buffer overflow of 'buffer' due to non null terminated string 'buffer' in llapi_search_tgt (Resolved)
  - LU-12327 DNE3: add llapi_dir_create_from_xattr() and llapi_dir_default_from_xattr() (Open)
  - LU-12369 lfs setstripe causes MDS DLM lock callback during create (Open)
  - LU-14396 cache llapi_search_mounts() result (Open)
- is related to
  - LU-14645 setstripe cleanup (Resolved)
  - LU-13693 lfs getstripe should avoid opening regular files (Resolved)