Details

- Type: New Feature

- Resolution: Fixed

- Priority: Minor

Description

Now that NVIDIA has made the official release of GPUDirect Storage, we are able to release the GDS feature integration for Lustre that has been under development and testing in conjunction with NVIDIA for some time.

This feature provides the following:

- Use direct bulk IO with GPU workloads

- Select the interface nearest the GPU for optimal performance

- Integrate GPU selection criteria into the LNet multi-rail selection algorithm.

- Handle IO less than 4K in a manner which works with the GPU direct workflow

- Use the memory registration/deregistration mechanism provided by the nvidia-fs driver.

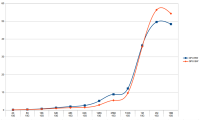

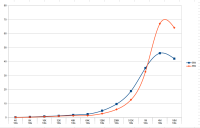

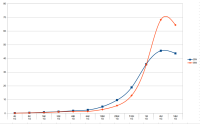

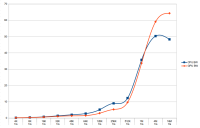

Performance comparison between GPU and CPU workloads attached. Bandwidth in GB/s.

Issue Links

- is related to LU-14795 NVidia GDS support in lustre (Closed)

Activity

"Oleg Drokin <green@whamcloud.com>" merged in patch https://review.whamcloud.com/44111/

Subject: LU-14798 lustre: Support RDMA only pages

Project: fs/lustre-release

Branch: master

Current Patch Set:

Commit: 29eabeb34c5ba2cffdb5353d108ea56e0549665b

"Oleg Drokin <green@whamcloud.com>" merged in patch https://review.whamcloud.com/44110/

Subject: LU-14798 lnet: add LNet GPU Direct Support

Project: fs/lustre-release

Branch: master

Current Patch Set:

Commit: a7a889f77cec3ad44543fd0b33669521e612097d

@lhara - your test is different from the one I showed. My test uses a SINGLE CPU + GPU that is near the IB card. You chose a different number of GPUs at an unknown distance. And what is the distance between the CPU and the GPU? Can you please attach lspci output so we can understand it?

PS. NUMA awareness isn't applicable to GPU <> IB communication. That is based on the PCI root complex configuration. NUMA applies only to CPU <> local memory access.
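One way to check the PCI distance discussed here is to compare the sysfs paths of two devices: the deeper their last shared path component, the closer their common upstream bridge. The following is only a sketch under assumptions: the helper name `pci_common_ancestor` is hypothetical, and the example paths are modeled on the `lspci -tv` tree posted in this ticket rather than read from a live system.

```shell
# Hypothetical helper: print the deepest path component shared by two
# sysfs PCI device paths (the nearest common upstream bridge).
pci_common_ancestor() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        na = split(a, pa, "/"); nb = split(b, pb, "/")
        common = ""
        for (i = 1; i <= na && i <= nb; i++) {
            # Appending "" forces a string comparison.
            if ((pa[i] "") != (pb[i] "")) break
            common = pa[i]
        }
        print common
    }'
}

# Example paths modeled on the lspci -tv tree in this ticket (assumed, not measured).
ib=/sys/devices/pci0000:00/0000:00:03.0/0000:0b:00.0/0000:0c:08.0/0000:10:00.0
gpu_near=/sys/devices/pci0000:00/0000:00:03.0/0000:0b:00.0/0000:0c:04.0/0000:0d:00.0
gpu_far=/sys/devices/pci0000:80/0000:80:02.0/0000:81:00.0/0000:82:04.0/0000:83:00.0

pci_common_ancestor "$ib" "$gpu_near"   # prints 0000:0b:00.0 (shared PCIe switch)
pci_common_ancestor "$ib" "$gpu_far"    # prints devices (different root complexes)
```

On a real host the two arguments could come from `readlink -f /sys/bus/pci/devices/<address>` for each device.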

Sorry for the delay in posting the test results comparing patches LU-14795 and LU-14798; it was due to client (DGX-A100) availability.

Here are the test results in detail.

Tested Hardware

- 1 x AI400x (23 x NVMe)

- 1 x NVIDIA DGX-A100

DGX-A100 supports up to 8 x GPU against 8 x IB-HDR200 and 2 x CPU. In my testing, 2 x IB-HDR200 and either 2 or 4 GPUs were used for GDS-IO. This is a fully NUMA-aware (GPU and IB-HDR200 are on the same NUMA node) and symmetric configuration.

The test cases are "thr=32, mode=0 (GDS-IO), op=1/0 (write/read) and iosize=16KB/1MB" with the gdsio script below.

    GDSIO=/usr/local/cuda-11.4/gds/tools/gdsio
    TARGET=/lustre/ai400x/client/gdsio

    mode=$1
    op=$2
    thr=$3
    iosize=$4

    $GDSIO -T 60 \
        -D $TARGET/md0 -d 0 -n 3 -w $thr -s 1G -i $iosize -x $mode -I $op \
        -D $TARGET/md4 -d 4 -n 7 -w $thr -s 1G -i $iosize -x $mode -I $op

    $GDSIO -T 60 \
        -D $TARGET/md0 -d 0 -n 3 -w $thr -s 1G -i $iosize -x $mode -I $op \
        -D $TARGET/md1 -d 1 -n 3 -w $thr -s 1G -i $iosize -x $mode -I $op \
        -D $TARGET/md4 -d 4 -n 7 -w $thr -s 1G -i $iosize -x $mode -I $op \
        -D $TARGET/md5 -d 5 -n 7 -w $thr -s 1G -i $iosize -x $mode -I $op

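2 x GPU, 2 x IB-HDR200

| Patch | iosize=16k Write | iosize=16k Read | iosize=1m Write | iosize=1m Read |
|---|---|---|---|---|
| LU-14795 | 0.968215 | 2.3704 | 35.3331 | 35.5543 |
| LU-14798 | 0.979587 | 2.24632 | 34.7941 | 34.0566 |

4 x GPU, 2 x IB-HDR200

| Patch | iosize=16k Write | iosize=16k Read | iosize=1m Write | iosize=1m Read |
|---|---|---|---|---|
| LU-14795 | 1.05208 | 2.62914 | 34.8957 | 37.4645 |
| LU-14798 | 1.28675 | 2.53229 | 36.0412 | 39.2747 |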

I saw that patch LU-14798 was ~5% slower than LU-14795 for 16K and 1M reads with 2 x GPU, but I didn't see a 23% drop.

However, patch LU-14795 was slower than LU-14798 in three of the four cases with 4 x GPU, 2 x HDR200; 16K read was the exception. (For 16K write in particular, LU-14798 was about 22% faster.)
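The percentages in a comparison like this depend on which patch is taken as the baseline. A small sketch of the arithmetic with awk, using values from the two result sets above; the helper name `pct_change` is an assumption made for illustration.

```shell
# pct_change BASE VAL: relative change of VAL versus BASE, in percent.
pct_change() {
    awk -v base="$1" -v val="$2" 'BEGIN { printf "%.1f\n", (val - base) / base * 100 }'
}

# 16K write, 4 x GPU: LU-14798 relative to LU-14795
pct_change 1.05208 1.28675    # prints 22.3
# Same pair with the baseline swapped: LU-14795 relative to LU-14798
pct_change 1.28675 1.05208    # prints -18.2
# 16K read, 2 x GPU: LU-14798 relative to LU-14795
pct_change 2.3704 2.24632     # prints -5.2
```

So the same 16K write pair reads as "22% faster" or "18% slower" depending on the direction of the comparison.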

shadow, please check LU-14795: I got build failures with the latest GDS code, which is part of CUDA 11.4.1. Patch LU-14798 built fine against both CUDA 11.4 and 11.4.1 without any changes, though.

Results after 10 iterations.

[alyashkov@hpcgate ~]$ for i in `ls log-*16k`; do echo $i; grep "Throughput: 1." $i | awk '{if ($10 == "16(KiB)") {sum += $12;}} END { print sum/10;}'; done

log-cray-16k

1.84928

log-master-16k

1.87858

log-wc-16k

1.54516

[alyashkov@hpcgate ~]$ for i in `ls log-*16k`; do echo $i; grep "Throughput: 0." $i | awk '{if ($10 == "16(KiB)") {sum += $12;}} END { print sum/10;}'; done

log-cray-16k

0.247549

log-master-16k

0.247369

log-wc-16k

0.245084
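The one-liners above rely on fixed field positions ($10, $12) and a hard-coded divisor of 10. As a hedged alternative, the sketch below looks up the values by their labels and divides by the number of matching samples. The function name `avg_throughput` and its arguments are assumptions; the two sample lines are copied from the test-wc1.sh output in this ticket.

```shell
# avg_throughput IOSIZE LO HI: read gdsio result lines on stdin and print the
# mean throughput of lines with the given IOSize and LO <= throughput < HI.
avg_throughput() {
    awk -v size="$1" -v lo="$2" -v hi="$3" '
        {
            iosize = ""; tput = ""
            for (i = 1; i < NF; i++) {
                if ($i == "IOSize:")     iosize = $(i + 1)
                if ($i == "Throughput:") tput   = $(i + 1)
            }
            if (iosize == size && tput != "" && tput + 0 >= lo + 0 && tput + 0 < hi + 0) {
                sum += tput; n++
            }
        }
        END { if (n > 0) printf "n=%d mean=%.6f\n", n, sum / n }
    '
}

# Two result lines taken verbatim from the test-wc1.sh run in this ticket.
sample_log='IoType: READ XferType: GPUD Threads: 32 DataSetSize: 30885168/1048576(KiB) IOSize: 16(KiB) Throughput: 0.244956 GiB/sec, Avg_Latency: 1993.301007 usecs ops: 1930323 total_time 120.243414 secs
IoType: READ XferType: GPUD Threads: 32 DataSetSize: 190027184/1048576(KiB) IOSize: 16(KiB) Throughput: 1.517209 GiB/sec, Avg_Latency: 321.826865 usecs ops: 11876699 total_time 119.445644 secs'

printf '%s\n' "$sample_log" | avg_throughput '16(KiB)' 1 2   # prints n=1 mean=1.517209
printf '%s\n' "$sample_log" | avg_throughput '16(KiB)' 0 1   # prints n=1 mean=0.244956
```

The LO/HI range plays the same role as the "Throughput: 1." and "Throughput: 0." grep patterns: it separates the fast and slow GPU results that share one log file.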

The test script is the same for each tree except for the directory the modules are loaded from.

    # cat test-wc1.sh
    #!/bin/bash

    # echo 1 > /sys/module/nvidia_fs/parameters/dbg_enabled

    umount /lustre/hdd && lctl net down ; lustre_rmmod
    pushd /home/hpcd/alyashkov/work/lustre-wc/lustre/tests
    #PTLDEBUG=-1 SUBSYSTEM=-1 DEBUG_SIZE=1000
    NETTYPE=o2ib LOAD=yes bash llmount.sh
    popd
    mount -t lustre 192.168.0.210@o2ib:/hdd /lustre/hdd
    lctl set_param debug=0 subsystem_debug=0
    # && lctl set_param debug=-1 subsystem_debug=-1 debug_mb=10000

    CUFILE_ENV_PATH_JSON=/home/hpcd/alyashkov/cufile.json
    for i in $(seq 10); do
        /usr/local/cuda-11.2/gds/tools/gdsio -f /lustre/hdd/alyashkov/foo -d 7 -w 32 -s 1G -i 16k -x 0 -I 0 -T 120
        /usr/local/cuda-11.2/gds/tools/gdsio -f /lustre/hdd/alyashkov/foo -d 0 -w 32 -s 1G -i 16k -x 0 -I 0 -T 120
    done
    # -d 0 -w 4 -s 4G -i 1M -I 1 -x 0 -V
    #lctl dk > /tmp/llog
    #dmesg -c > /tmp/n-log
    #umount /lustre/hdd && lctl net down ; lustre_rmmod

Test system: HPE ProLiant XL270d Gen9

PCIe tree

root@ynode02:/home/hpcd/alyashkov# lspci -tv

-+-[0000:ff]-+-08.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D QPI Link 0

| +-08.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D QPI Link 0

| +-09.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D QPI Link 1

| +-09.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D QPI Link 1

| +-0b.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R3 QPI Link 0/1

| +-0b.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R3 QPI Link 0/1

| +-0b.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R3 QPI Link 0/1

| +-0b.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R3 QPI Link Debug

| +-0c.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-10.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R2PCIe Agent

| +-10.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R2PCIe Agent

| +-10.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Ubox

| +-10.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Ubox

| +-10.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Ubox

| +-12.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 0

| +-12.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 0

| +-12.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 0 Debug

| +-12.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 1

| +-12.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 1

| +-12.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 1 Debug

| +-13.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Target Address/Thermal/RAS

| +-13.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Target Address/Thermal/RAS

| +-13.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel Target Address Decoder

| +-13.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel Target Address Decoder

| +-13.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 0/1 Broadcast

| +-13.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Global Broadcast

| +-14.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel 0 Thermal Control

| +-14.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel 1 Thermal Control

| +-14.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel 0 Error

| +-14.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel 1 Error

| +-14.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 0/1 Interface

| +-14.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 0/1 Interface

| +-14.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 0/1 Interface

| +-14.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 0/1 Interface

| +-16.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Target Address/Thermal/RAS

| +-16.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Target Address/Thermal/RAS

| +-16.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Channel Target Address Decoder

| +-16.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Channel Target Address Decoder

| +-16.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 2/3 Broadcast

| +-16.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Global Broadcast

| +-17.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 1 - Channel 0 Thermal Control

| +-17.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 1 - Channel 1 Thermal Control

| +-17.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 1 - Channel 0 Error

| +-17.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 1 - Channel 1 Error

| +-17.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 2/3 Interface

| +-17.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 2/3 Interface

| +-17.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 2/3 Interface

| +-17.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 2/3 Interface

| +-1e.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| +-1e.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| +-1e.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| +-1e.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| +-1e.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| +-1f.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| \-1f.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

+-[0000:80]-+-00.0-[94]--

| +-01.0-[95]--

| +-01.1-[96]--

| +-02.0-[81-8b]----00.0-[82-8b]--+-04.0-[83]----00.0 NVIDIA Corporation GP100GL [Tesla P100 PCIe 16GB]

| | +-08.0-[86]--

| | \-0c.0-[89]----00.0 NVIDIA Corporation GP100GL [Tesla P100 PCIe 16GB]

| +-02.1-[97]--

| +-02.2-[98]--

| +-02.3-[99]--

| +-03.0-[8c-93]----00.0-[8d-93]--+-08.0-[8e]----00.0 NVIDIA Corporation GP100GL [Tesla P100 PCIe 16GB]

| | \-10.0-[91]----00.0 NVIDIA Corporation GP100GL [Tesla P100 PCIe 16GB]

| +-03.1-[9a]--

| +-03.2-[9b]--

| +-03.3-[9c]--

| +-04.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 0

| +-04.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 1

| +-04.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 2

| +-04.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 3

| +-04.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 4

| +-04.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 5

| +-04.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 6

| +-04.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 7

| +-05.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Map/VTd_Misc/System Management

| +-05.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D IIO Hot Plug

| +-05.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D IIO RAS/Control Status/Global Errors

| \-05.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D I/O APIC

+-[0000:7f]-+-08.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D QPI Link 0

| +-08.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D QPI Link 0

| +-09.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D QPI Link 1

| +-09.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D QPI Link 1

| +-0b.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R3 QPI Link 0/1

| +-0b.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R3 QPI Link 0/1

| +-0b.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R3 QPI Link 0/1

| +-0b.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R3 QPI Link Debug

| +-0c.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0c.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0d.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-0f.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Caching Agent

| +-10.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R2PCIe Agent

| +-10.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D R2PCIe Agent

| +-10.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Ubox

| +-10.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Ubox

| +-10.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Ubox

| +-12.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 0

| +-12.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 0

| +-12.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 0 Debug

| +-12.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 1

| +-12.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 1

| +-12.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Home Agent 1 Debug

| +-13.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Target Address/Thermal/RAS

| +-13.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Target Address/Thermal/RAS

| +-13.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel Target Address Decoder

| +-13.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel Target Address Decoder

| +-13.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 0/1 Broadcast

| +-13.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Global Broadcast

| +-14.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel 0 Thermal Control

| +-14.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel 1 Thermal Control

| +-14.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel 0 Error

| +-14.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 0 - Channel 1 Error

| +-14.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 0/1 Interface

| +-14.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 0/1 Interface

| +-14.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 0/1 Interface

| +-14.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 0/1 Interface

| +-16.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Target Address/Thermal/RAS

| +-16.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Target Address/Thermal/RAS

| +-16.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Channel Target Address Decoder

| +-16.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Channel Target Address Decoder

| +-16.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 2/3 Broadcast

| +-16.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Global Broadcast

| +-17.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 1 - Channel 0 Thermal Control

| +-17.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 1 - Channel 1 Thermal Control

| +-17.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 1 - Channel 0 Error

| +-17.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Memory Controller 1 - Channel 1 Error

| +-17.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 2/3 Interface

| +-17.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 2/3 Interface

| +-17.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 2/3 Interface

| +-17.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DDRIO Channel 2/3 Interface

| +-1e.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| +-1e.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| +-1e.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| +-1e.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| +-1e.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| +-1f.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

| \-1f.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Power Control Unit

\-[0000:00]-+-00.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D DMI2

+-01.0-[16]--

+-01.1-[1c]--

+-02.0-[03-0a]----00.0-[04-0a]--+-08.0-[05]----00.0 NVIDIA Corporation GP100GL [Tesla P100 PCIe 16GB]

| \-10.0-[08]----00.0 NVIDIA Corporation GP100GL [Tesla P100 PCIe 16GB]

+-02.1-[1d]--

+-02.2-[1e]--

+-02.3-[1f]--

+-03.0-[0b-15]----00.0-[0c-15]--+-04.0-[0d]----00.0 NVIDIA Corporation GP100GL [Tesla P100 PCIe 16GB]

| +-08.0-[10]--+-00.0 Mellanox Technologies MT27700 Family [ConnectX-4]

| | \-00.1 Mellanox Technologies MT27700 Family [ConnectX-4]

| \-0c.0-[13]----00.0 NVIDIA Corporation GP100GL [Tesla P100 PCIe 16GB]

+-03.1-[19]--

+-03.2-[1a]--

+-03.3-[1b]--

+-04.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 0

+-04.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 1

+-04.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 2

+-04.3 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 3

+-04.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 4

+-04.5 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 5

+-04.6 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 6

+-04.7 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Crystal Beach DMA Channel 7

+-05.0 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D Map/VTd_Misc/System Management

+-05.1 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D IIO Hot Plug

+-05.2 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D IIO RAS/Control Status/Global Errors

+-05.4 Intel Corporation Xeon E7 v4/Xeon E5 v4/Xeon E3 v4/Xeon D I/O APIC

+-11.0 Intel Corporation C610/X99 series chipset SPSR

+-14.0 Intel Corporation C610/X99 series chipset USB xHCI Host Controller

+-1a.0 Intel Corporation C610/X99 series chipset USB Enhanced Host Controller #2

+-1c.0-[20]--

+-1c.2-[01]--+-00.0 Hewlett-Packard Company Integrated Lights-Out Standard Slave Instrumentation & System Support

| +-00.1 Matrox Electronics Systems Ltd. MGA G200EH

| +-00.2 Hewlett-Packard Company Integrated Lights-Out Standard Management Processor Support and Messaging

| \-00.4 Hewlett-Packard Company Integrated Lights-Out Standard Virtual USB Controller

+-1c.4-[02]--+-00.0 Intel Corporation I350 Gigabit Network Connection

| \-00.1 Intel Corporation I350 Gigabit Network Connection

+-1d.0 Intel Corporation C610/X99 series chipset USB Enhanced Host Controller #1

+-1f.0 Intel Corporation C610/X99 series chipset LPC Controller

+-1f.2 Intel Corporation C610/X99 series chipset 6-Port SATA Controller [AHCI mode]

\-1f.3 Intel Corporation C610/X99 series chipset SMBus Controller

    # lscpu
    Architecture:         x86_64
    CPU op-mode(s):       32-bit, 64-bit
    Byte Order:           Little Endian
    Address sizes:        46 bits physical, 48 bits virtual
    CPU(s):               56
    On-line CPU(s) list:  0-55
    Thread(s) per core:   2
    Core(s) per socket:   14
    Socket(s):            2
    NUMA node(s):         2
    Vendor ID:            GenuineIntel
    CPU family:           6
    Model:                79
    Model name:           Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz
    Stepping:             1
    CPU MHz:              2220.098
    BogoMIPS:             4789.01
    Virtualization:       VT-x
    L1d cache:            896 KiB
    L1i cache:            896 KiB
    L2 cache:             7 MiB
    L3 cache:             70 MiB
    NUMA node0 CPU(s):    0-13,28-41
    NUMA node1 CPU(s):    14-27,42-55

    # uname -a
    Linux ynode02 5.4.0-77-generic #86-Ubuntu SMP Thu Jun 17 02:35:03 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

    # ofed_info | head -1
    MLNX_OFED_LINUX-5.3-1.0.0.1 (OFED-5.3-1.0.0):

    # ls -d /usr/src/nvidia*
    /usr/src/nvidia-460.80 /usr/src/nvidia-fs-2.3.4 /usr/src/nvidia-fs-2.7.49

With the 1M chunk size the perf drop is smaller, around 5%.

    + bash llmount.sh
    Loading modules from /home/hpcd/alyashkov/work/lustre-wc/lustre/tests/..
    detected 56 online CPUs by sysfs
    libcfs will create CPU partition based on online CPUs
    ../lnet/lnet/lnet options: 'networks=o2ib(ibs9f0) accept=all'
    gss/krb5 is not supported
    + popd
    /home/hpcd/alyashkov
    + mount -t lustre 192.168.0.210@o2ib:/hdd /lustre/hdd
    + lctl set_param debug=0 subsystem_debug=0
    debug=0
    subsystem_debug=0
    + CUFILE_ENV_PATH_JSON=/home/hpcd/alyashkov/cufile.json
    + /usr/local/cuda-11.2/gds/tools/gdsio -f /lustre/hdd/alyashkov/foo -d 7 -w 32 -s 1G -i 1M -x 0 -I 0 -T 120
    IoType: READ XferType: GPUD Threads: 32 DataSetSize: 27216896/1048576(KiB) IOSize: 1024(KiB) Throughput: 0.217678 GiB/sec, Avg_Latency: 143491.908904 usecs ops: 26579 total_time 119.240755 secs
    + /usr/local/cuda-11.2/gds/tools/gdsio -f /lustre/hdd/alyashkov/foo -d 0 -w 32 -s 1G -i 1M -x 0 -I 0 -T 120
    IoType: READ XferType: GPUD Threads: 32 DataSetSize: 1072263168/1048576(KiB) IOSize: 1024(KiB) Throughput: 8.589992 GiB/sec, Avg_Latency: 3637.855962 usecs ops: 1047132 total_time 119.044332 secs
    root@ynode02:/home/hpcd/alyashkov# bash test1.sh
    LNET busy
    /home/hpcd/alyashkov/work/lustre/lustre/tests /home/hpcd/alyashkov
    e2label: No such file or directory while trying to open /tmp/lustre-mdt1
    Couldn't find valid filesystem superblock.
    e2label: No such file or directory while trying to open /tmp/lustre-mdt1
    Couldn't find valid filesystem superblock.
    e2label: No such file or directory while trying to open /tmp/lustre-ost1
    Couldn't find valid filesystem superblock.
    Loading modules from /home/hpcd/alyashkov/work/lustre/lustre/tests/..
    detected 56 online CPUs by sysfs
    libcfs will create CPU partition based on online CPUs
    ../lnet/lnet/lnet options: 'networks=o2ib(ibs9f0) accept=all' enable_experimental_features=1
    gss/krb5 is not supported
    /home/hpcd/alyashkov
    debug=0
    subsystem_debug=0
    IoType: READ XferType: GPUD Threads: 32 DataSetSize: 27275264/1048576(KiB) IOSize: 1024(KiB) Throughput: 0.217832 GiB/sec, Avg_Latency: 143344.443230 usecs ops: 26636 total_time 119.411700 secs
    IoType: READ XferType: GPUD Threads: 32 DataSetSize: 1117265920/1048576(KiB) IOSize: 1024(KiB) Throughput: 8.940439 GiB/sec, Avg_Latency: 3495.253255 usecs ops: 1091080 total_time 119.178470 secs
    root@ynode02:/home/hpcd/alyashkov#

    root@ynode02:/home/hpcd/alyashkov# bash test-wc1.sh
    LNET busy
    /home/hpcd/alyashkov/work/lustre-wc/lustre/tests /home/hpcd/alyashkov
    Loading modules from /home/hpcd/alyashkov/work/lustre-wc/lustre/tests/..
    detected 56 online CPUs by sysfs
    libcfs will create CPU partition based on online CPUs
    ../lnet/lnet/lnet options: 'networks=o2ib(ibs9f0) accept=all'
    gss/krb5 is not supported
    /home/hpcd/alyashkov
    debug=0
    subsystem_debug=0
    IoType: READ XferType: GPUD Threads: 32 DataSetSize: 30885168/1048576(KiB) IOSize: 16(KiB) Throughput: 0.244956 GiB/sec, Avg_Latency: 1993.301007 usecs ops: 1930323 total_time 120.243414 secs
    IoType: READ XferType: GPUD Threads: 32 DataSetSize: 190027184/1048576(KiB) IOSize: 16(KiB) Throughput: 1.517209 GiB/sec, Avg_Latency: 321.826865 usecs ops: 11876699 total_time 119.445644 secs
    root@ynode02:/home/hpcd/alyashkov# bash test1-1.sh
    LNET busy
    /home/hpcd/alyashkov/work/lustre/lustre/tests /home/hpcd/alyashkov
    e2label: No such file or directory while trying to open /tmp/lustre-mdt1
    Couldn't find valid filesystem superblock.
    e2label: No such file or directory while trying to open /tmp/lustre-mdt1
    Couldn't find valid filesystem superblock.
    e2label: No such file or directory while trying to open /tmp/lustre-ost1
    Couldn't find valid filesystem superblock.
    Loading modules from /home/hpcd/alyashkov/work/lustre/lustre/tests/..
    detected 56 online CPUs by sysfs
    libcfs will create CPU partition based on online CPUs
    ../lnet/lnet/lnet options: 'networks=o2ib(ibs9f0) accept=all' enable_experimental_features=1
    gss/krb5 is not supported
    /home/hpcd/alyashkov
    debug=0
    subsystem_debug=0
    IoType: READ XferType: GPUD Threads: 32 DataSetSize: 30880000/1048576(KiB) IOSize: 16(KiB) Throughput: 0.247379 GiB/sec, Avg_Latency: 1973.783888 usecs ops: 1930000 total_time 119.045719 secs
    IoType: READ XferType: GPUD Threads: 32 DataSetSize: 236412320/1048576(KiB) IOSize: 16(KiB) Throughput: 1.880670 GiB/sec, Avg_Latency: 259.630924 usecs ops: 14775770 total_time 119.883004 secs

IO load generated by

/usr/local/cuda-11.2/gds/tools/gdsio -f /lustre/hdd/alyashkov/foo -d 7 -w 32 -s 1G -i 16k -x 0 -I 0 -T 120

/usr/local/cuda-11.2/gds/tools/gdsio -f /lustre/hdd/alyashkov/foo -d 0 -w 32 -s 1G -i 16k -x 0 -I 0 -T 120

Host is an HP ProLiant with 8 GPUs + 2 IB cards. The GPUs are split across the two NUMA nodes: GPU0 .. GPU3 in NUMA0, GPU4 .. GPU7 in NUMA1. IB0 (active) is in NUMA0, IB1 (inactive) in NUMA1. Connected to the L300 system. 1 stripe per file.

OS Ubuntu 20.04 + 5.4 kernel + MOFED 5.3.

nvidia-fs from the GDS 1.0 release.

This difference is likely due to differences in lnet_select_best_ni and is expected for the WC patch version.

My setup is the fully NUMA-aware configuration I mentioned above.

The tested GPUs and IB interfaces are located on the same NUMA nodes; see below.

    root@dgxa100:~# nvidia-smi
    Tue Aug 10 00:15:20 2021
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 470.57.02    Driver Version: 470.57.02    CUDA Version: 11.4     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |                               |                      |               MIG M. |
    |===============================+======================+======================|
    |   0  NVIDIA A100-SXM...  On   | 00000000:07:00.0 Off |                    0 |
    | N/A   27C    P0    53W / 400W |      0MiB / 40536MiB |      0%      Default |
    |                               |                      |             Disabled |
    +-------------------------------+----------------------+----------------------+
    |   1  NVIDIA A100-SXM...  On   | 00000000:0F:00.0 Off |                    0 |
    | N/A   26C    P0    54W / 400W |      0MiB / 40536MiB |      0%      Default |
    |                               |                      |             Disabled |
    +-------------------------------+----------------------+----------------------+
    |   2  NVIDIA A100-SXM...  On   | 00000000:47:00.0 Off |                    0 |
    | N/A   27C    P0    52W / 400W |      0MiB / 40536MiB |      0%      Default |
    |                               |                      |             Disabled |
    +-------------------------------+----------------------+----------------------+
    |   3  NVIDIA A100-SXM...  On   | 00000000:4E:00.0 Off |                    0 |
    | N/A   26C    P0    51W / 400W |      0MiB / 40536MiB |      0%      Default |
    |                               |                      |             Disabled |
    +-------------------------------+----------------------+----------------------+
    |   4  NVIDIA A100-SXM...  On   | 00000000:87:00.0 Off |                    0 |
    | N/A   31C    P0    53W / 400W |      0MiB / 40536MiB |      0%      Default |
    |                               |                      |             Disabled |
    +-------------------------------+----------------------+----------------------+
    |   5  NVIDIA A100-SXM...  On   | 00000000:90:00.0 Off |                    0 |
    | N/A   31C    P0    58W / 400W |      0MiB / 40536MiB |      0%      Default |
    |                               |                      |             Disabled |
    +-------------------------------+----------------------+----------------------+
    |   6  NVIDIA A100-SXM...  On   | 00000000:B7:00.0 Off |                    0 |
    | N/A   31C    P0    55W / 400W |      0MiB / 40536MiB |      0%      Default |
    |                               |                      |             Disabled |
    +-------------------------------+----------------------+----------------------+
    |   7  NVIDIA A100-SXM...  On   | 00000000:BD:00.0 Off |                    0 |
    | N/A   31C    P0    54W / 400W |      0MiB / 40536MiB |      0%      Default |
    |                               |                      |             Disabled |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                                  |
    |  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
    |        ID   ID                                                   Usage      |
    |=============================================================================|
    |  No running processes found                                                 |
    +-----------------------------------------------------------------------------+

The GPU indexes I selected were 0, 1, 4 and 5 (see the "-d X" options in the gdsio test script I ran).

Those GPUs' PCI bus IDs can be identified from the "nvidia-smi" output above.

Those PCI devices' NUMA nodes are 3 or 7, as below. That's why "-n 3" or "-n 7" is passed to gdsio.

And two IB interfaces (ibp12s0 and ibp141s0) were configured for LNet.

    root@dgxa100:~# lnetctl net show
    net:
        - net type: lo
          local NI(s):
            - nid: 0@lo
              status: up
        - net type: o2ib
          local NI(s):
            - nid: 172.16.167.67@o2ib
              status: up
              interfaces:
                  0: ibp12s0
            - nid: 172.16.178.67@o2ib
              status: up
              interfaces:
                  0: ibp141s0

Those IB interfaces' PCI bus addresses are 0000:0c:00.0 (ibp12s0) and 0000:8d:00.0 (ibp141s0).

ibp12s0 is represented by mlx5_0 and ibp141s0 by mlx5_6, and their NUMA nodes are also 3 and 7, as below.

So GPU ids 0 and 1 as well as IB interface ibp12s0 (mlx5_0) are located on NUMA node 3, and GPU ids 4 and 5 and IB interface ibp141s0 (mlx5_6) are located on NUMA node 7.

In fact, the DGX-A100 has 8 x GPU, 8 x IB interfaces and PCI switches between the GPUs (or IB) <-> CPU in the above setting. I've been testing with multiple GPUs and IB interfaces because one of the benefits of GDS-IO is that it can eliminate the bandwidth limitation on the PCI switches, with every GPU talking to storage through its closest IB interface.
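On Linux the NUMA node of a PCI device can be read from its `numa_node` attribute in sysfs, which is one way to cross-check a GPU/IB pairing like this. A minimal sketch, assuming the helper name `pci_numa_node` and an optional sysfs root argument (both hypothetical, the latter added only so the function can be exercised off-host). It also converts the 8-digit, upper-case bus IDs printed by nvidia-smi into the 4-digit, lower-case form used in sysfs.

```shell
# pci_numa_node ADDRESS [SYSFS_ROOT]
# ADDRESS may be in nvidia-smi form (00000000:0F:00.0) or sysfs form (0000:0f:00.0).
pci_numa_node() {
    # Lower-case the hex digits and drop the extra leading "0000" of an 8-digit domain.
    bdf=$(printf '%s\n' "$1" | tr 'A-F' 'a-f' |
          sed 's/^0000\([0-9a-f]\{4\}:[0-9a-f]\{2\}:\)/\1/')
    cat "${2:-/sys}/bus/pci/devices/$bdf/numa_node"
}
```

For example, `pci_numa_node 00000000:07:00.0` and `pci_numa_node 0000:0c:00.0` would be expected to print the same node number if GPU 0 and ibp12s0 share a NUMA node, as described above.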