Details

Bug

Resolution: Fixed

Minor

None

None

3

9223372036854775807

Description

The qmt_pool_add_rem() message started appearing on 2021-08-18 in test output, and there have been a few thousand hits per day:

    LustreError: 5659:0:(qmt_pool.c:1390:qmt_pool_add_rem()) add to: can't scratch-QMT0000 scratch-OST0000_UUID pool pool1: rc = -17
    LustreError: 5666:0:(qmt_pool.c:1390:qmt_pool_add_rem()) add to: can't scratch-QMT0000 scratch-OST0001_UUID pool pool2: rc = -17
    LustreError: 5674:0:(qmt_pool.c:1390:qmt_pool_add_rem()) add to: can't scratch-QMT0000 scratch-OST0002_UUID pool pool3: rc = -17

The patches that landed on that day are listed below (no other patches landed between 2021-08-10 and 2021-08-25):

    $ git log --oneline --before 2021-08-22 --after 2021-08-16
    d8204f903a (tag: v2_14_54, tag: 2.14.54) New tag 2.14.54
    5220160648 LU-14093 lutf: fix build with gcc10
    a205334da5 LU-14903 doc: update lfs-setdirstripe man page
    1313cad7a1 LU-14899 ldiskfs: Add 5.4.136 mainline kernel support
    c44afcfb72 LU-12815 socklnd: set conns_per_peer based on link speed
    6e30cd0844 LU-14871 kernel: kernel update RHEL7.9 [3.10.0-1160.36.2.el7]
    14b8276e06 LU-14865 utils: llog_reader.c printf type mismatch
    aa5d081237 LU-9859 lnet: fold lprocfs_call_handler functionality into lnet_debugfs_*
    e423a0bd7a LU-14787 libcfs: Proved an abstraction for AS_EXITING
    76c71a167b LU-14775 kernel: kernel update SLES12 SP5 [4.12.14-122.74.1]
    67752f6db2 LU-14773 tests: skip check_network() on working node
    024f9303bc LU-14668 lnet: Lock primary NID logic
    684943e2d0 LU-14668 lnet: peer state to lock primary nid
    16321de596 LU-14661 obdclass: Add peer/peer NI when processing llog *
    ac201366ad LU-14661 lnet: Provide kernel API for adding peers
    51350e9b73 LU-14531 osd: serialize access to object vs object destroy
    a5cbe7883d LU-12815 socklnd: allow dynamic setting of conns_per_peer
    d13d8158e8 LU-14093 mgc: rework mgc_apply_recover_logs() for gcc10
    8dd4488a07 LU-6142 tests: remove iam_ut binary
    301d76a711 LU-14876 out: don't connect to busy MDS-MDS export *

It isn't clear which of these patches introduced the problem, but the message is printed in various subtests that create or remove OST pools.

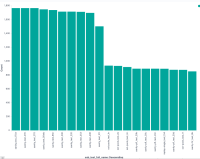

The graph above shows occurrences by subtest since 2021-08-18; it appears that this happens in any subtest that adds a pool.


Issue Links

- is related to: LU-15043 OST spill pools should not allow spill pool loops (Resolved)