Details

Bug

Resolution: Fixed

Major

Lustre 2.4.0

None

Hyperion/LLNL

3

8187

Description

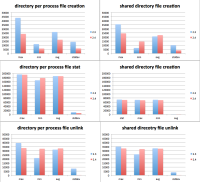

We compared Lustre 2.3.0, 2.1.5, and current Lustre, and saw a regression in metadata performance relative to 2.3.0. The spreadsheet is attached.

Attachments

Issue Links

- is blocked by: LU-3396 Disable quota by default for 2.4 (Closed)