Details

- Bug
- Resolution: Fixed
- Major
- Lustre 2.4.0
- None
- Hyperion/LLNL
- 3
- 8187

Description

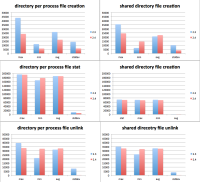

We compared 2.3.0, 2.1.5 and current Lustre, and saw a regression in metadata performance relative to 2.3.0. Spreadsheet attached.

Attachments

Issue Links

- is blocked by
  - LU-3396 Disable quota by default for 2.4 (Closed)

This is a previously known problem, discussed in LU-2442. The "on-by-default" quota accounting introduced serialization in the quota layer that broke the SMP scaling optimizations made in the 2.3 code. This was not fixed until v2_3_63_0-35-g6df197d (http://review.whamcloud.com/5010). Until then, the quota bottleneck will mask any other metadata performance regressions when testing on a faster system, unless the quota feature is disabled on the MDS (tune2fs -O ^quota /dev/mdt). There may well still be a performance impact from the "on-by-default" quota accounting, and that is worth testing before looking for some other cause of this regression.
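Before comparing metadata numbers across versions, it helps to confirm whether the MDT under test actually has the quota feature enabled. The following is a minimal sketch, assuming the ldiskfs MDT reports its features the way ext4 does in the "Filesystem features:" line of `dumpe2fs -h`. The function name `mdt_has_quota_feature` and the sample header text are hypothetical illustrations, not part of Lustre.

```python
def mdt_has_quota_feature(dumpe2fs_header: str) -> bool:
    """Return True if 'quota' appears in the feature list of `dumpe2fs -h` output.

    Assumes the ext4-style "Filesystem features:" line; raises ValueError
    if that line is missing (e.g. the wrong command output was passed in).
    """
    for line in dumpe2fs_header.splitlines():
        if line.startswith("Filesystem features:"):
            # Everything after the first colon is a space-separated feature list.
            features = line.split(":", 1)[1].split()
            return "quota" in features
    raise ValueError("no 'Filesystem features:' line found")


# Abbreviated, illustrative header text (hypothetical, not captured from a real MDT).
sample = "Filesystem features:      has_journal ext_attr dir_index filetype quota\n"
print(mdt_has_quota_feature(sample))  # prints True
```

In practice the input would be the captured output of `dumpe2fs -h` run against the MDT device; a result of True means quota accounting is active and may be contributing to the regression.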