Details

- Bug
- Resolution: Fixed
- Major
- Lustre 2.4.0
- None
- Hyperion/LLNL
- 3
- 8187

Description

We performed a comparison between 2.3.0, 2.1.5 and current Lustre. We saw a regression in metadata performance compared to 2.3.0. Spreadsheet attached.

Attachments

Issue Links

- is blocked by LU-3396 Disable quota by default for 2.4 (Closed)

Activity

Instead of eliminating the global locks entirely, a small fix in dquot_initialize() might relieve the contention caused by dqget()/dqput(): in dquot_initialize(), we would call dqget() only when i_dquot is not yet initialized, which avoids two pairs of dqget()/dqput() calls in most cases. I'll propose a patch soon.

dqget()/dqput() mainly take and drop a reference on the in-memory per-ID dquot data. They acquire global locks such as dq_list_lock and dq_state_lock (since they look up the dquot list and do some state checking), so contention on those global locks could be severe in this test case. If we can replace them with RCU or read/write locks, things will be better.

I heard from Lai that there were some old patches that tried to remove those global locks, but they didn't gain much interest from the community and were not reviewed. Lai, could you comment on this?

Regarding the quota record commit (mark_dquot_dirty() -> ext4_mark_dquot_dirty() -> ext4_write_dquot() -> dquot_commit(), which should happen along with each transaction): it does require the global locks dqio_mutex and dq_list_lock, but surprisingly I didn't see it in the top oprofile samples. Perhaps that is just because there are many more dqget()/dqput() calls than dquot commit calls? Once we resolve the bottleneck in dqget()/dqput(), the contention in dquot commit may well come to light.

I find it strange that dqget() is called 2M times, but it only looks like 20k blocks are being allocated (based on the ldiskfs and jbd2 call counts). Before trying to optimize the speed of that function, it is probably better to reduce the number of times it is called?

It is also a case where the same quota entry is being accessed for every call (same UID and GID each time), so I wonder if that common case could be optimized in some way?

Are any of these issues fixed in the original quota patches?

Unfortunately, since all of the threads are contending to update the same record, there isn't an easy way to reduce contention. The only thing I can think of is to have a journal pre-commit callback that does only a single quota update to disk per transaction, and uses percpu counters for the per-quota-per-transaction updates in memory. That would certainly avoid contention, and is no less correct in the face of a crash. No idea how easy that would be to implement.
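The batching idea above can be modeled in user space. This is only a toy sketch of the accounting scheme, assuming per-thread dictionaries stand in for percpu counters and an explicit `pre_commit()` call stands in for a journal pre-commit callback; the class and method names are hypothetical and no kernel or jbd2 API is used.

```python
import threading
from collections import defaultdict


class TransactionQuotaDeltas:
    """Toy model: per-thread usage deltas folded into one record at commit."""

    def __init__(self):
        self._local = threading.local()
        self._all_deltas = []  # one dict per thread that touched this transaction
        self._register_lock = threading.Lock()

    def _deltas(self):
        deltas = getattr(self._local, "deltas", None)
        if deltas is None:
            deltas = defaultdict(int)
            self._local.deltas = deltas
            # Taken once per thread, not once per update.
            with self._register_lock:
                self._all_deltas.append(deltas)
        return deltas

    def charge(self, quota_id, inodes):
        # Hot path: touches only this thread's private dict.
        self._deltas()[quota_id] += inodes

    def pre_commit(self, on_disk_usage):
        """Fold all deltas into the shared record, once per transaction.

        Must be called after all workers have finished with the transaction.
        """
        for deltas in self._all_deltas:
            for quota_id, delta in deltas.items():
                on_disk_usage[quota_id] = on_disk_usage.get(quota_id, 0) + delta
            deltas.clear()


def run_workers(txn, quota_id, n_threads, creates_per_thread):
    def worker():
        for _ in range(creates_per_thread):
            txn.charge(quota_id, 1)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


# All threads charge the same ID, as in the mds-survey case (same UID/GID).
on_disk_usage = {500: 10}
txn = TransactionQuotaDeltas()
run_workers(txn, quota_id=500, n_threads=8, creates_per_thread=1000)
txn.pre_commit(on_disk_usage)
print(on_disk_usage)
```

The point of the sketch is that the shared record is written once per transaction rather than once per create; whether that maps cleanly onto the real quota and journal code is the open question raised above.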

Looking closer at dqget()/dqput(), I realized that there are still quite a few global locks in the quota code: dq_list_lock, dq_state_lock, dq_data_lock. The fix for LU-2442 only removes the global lock dqptr_sem, which has the most significant impact on performance. Removing all of the quota global locks requires a lot of changes in VFS code; that isn't a small project, so maybe we should open a new project for a future release?

From Minh's results we can see that, because of quota file updates, when testing 256 threads over 256 directories (1 thread per directory, no contention on parent directory updates), create/unlink without quota is faster than create/unlink with quota on. I think the oprofile data confirms it:

Counted CPU_CLK_UNHALTED events (Clock cycles when not halted) with a unit mask of 0x00 (No unit mask) count 100000

| samples | % | image name | app name | symbol name |
| --- | --- | --- | --- | --- |
| 2276160 | 46.2251 | vmlinux | vmlinux | dqput |
| 963873 | 19.5747 | vmlinux | vmlinux | dqget |
| 335277 | 6.8089 | ldiskfs | ldiskfs | /ldiskfs |
| 258028 | 5.2401 | vmlinux | vmlinux | dquot_mark_dquot_dirty |
| 110819 | 2.2506 | osd_ldiskfs | osd_ldiskfs | /osd_ldiskfs |
| 76925 | 1.5622 | obdclass | obdclass | /obdclass |
| 58193 | 1.1818 | mdd | mdd | /mdd |
| 41931 | 0.8516 | vmlinux | vmlinux | __find_get_block |
| 32408 | 0.6582 | lod | lod | /lod |
| 20711 | 0.4206 | jbd2.ko | jbd2.ko | jbd2_journal_add_journal_head |
| 18598 | 0.3777 | jbd2.ko | jbd2.ko | do_get_write_access |
| 18579 | 0.3773 | vmlinux | vmlinux | __find_get_block_slow |
| 18364 | 0.3729 | libcfs | libcfs | /libcfs |
| 17833 | 0.3622 | oprofiled | oprofiled | /usr/bin/oprofiled |
| 17472 | 0.3548 | vmlinux | vmlinux | mutex_lock |

I'm not sure we can improve the performance (with quota) much further in this respect, because updating a single quota file can always be the bottleneck.

Here is some info about turning off quota:

quota on (default)

[root@mds03 lu3305]# file_count=250000 thrlo=256 thrhi=256 /usr/bin/mds-survey

Wed May 22 13:09:18 PDT 2013 /usr/bin/mds-survey from mds03

mdt 1 file 250000 dir 256 thr 256 create 44082.00 [44082.00,44082.00] lookup 2355576.51 [2355576.51,2355576.51] md_getattr 1223878.40 [1223878.40,1223878.40] setxattr 29649.06 [29649.06,29649.06] destroy 46921.25 [46921.25,46921.25]

done!

[root@mds03 lu3305]# file_count=250000 thrlo=256 thrhi=256 /usr/bin/mds-survey

Wed May 22 13:10:48 PDT 2013 /usr/bin/mds-survey from mds03

mdt 1 file 250000 dir 256 thr 256 create 44897.20 [44897.20,44897.20] lookup 2659938.47 [2659938.47,2659938.47] md_getattr 1321129.64 [1321129.64,1321129.64] setxattr 57581.92 [57581.92,57581.92] destroy 35684.58 [35684.58,35684.58]

done!

[root@mds03 lu3305]# file_count=250000 thrlo=256 thrhi=256 /usr/bin/mds-survey

Wed May 22 13:11:32 PDT 2013 /usr/bin/mds-survey from mds03

mdt 1 file 250000 dir 256 thr 256 create 43014.95 [43014.95,43014.95] lookup 2802301.45 [2802301.45,2802301.45] md_getattr 1348641.14 [1348641.14,1348641.14] setxattr 32394.97 [32394.97,32394.97] destroy 39988.76 [39988.76,39988.76]

done!

[root@mds03 lu3305]# file_count=250000 thrlo=256 thrhi=256 /usr/bin/mds-survey

Wed May 22 13:12:10 PDT 2013 /usr/bin/mds-survey from mds03

mdt 1 file 250000 dir 256 thr 256 create 42977.31 [42977.31,42977.31] lookup 2828152.94 [2828152.94,2828152.94] md_getattr 1357190.19 [1357190.19,1357190.19] setxattr 34235.71 [34235.71,34235.71] destroy 48435.62 [48435.62,48435.62]

done!

[root@mds03 lu3305]# file_count=250000 thrlo=256 thrhi=256 /usr/bin/mds-survey

Wed May 22 13:12:53 PDT 2013 /usr/bin/mds-survey from mds03

mdt 1 file 250000 dir 256 thr 256 create 42984.65 [42984.65,42984.65] lookup 2782484.74 [2782484.74,2782484.74] md_getattr 1344989.45 [1344989.45,1344989.45] setxattr 58604.47 [58604.47,58604.47] destroy 34678.90 [34678.90,34678.90]

done!

quota off via tune2fs -O ^quota <dev>

[root@mds03 lu3305]# file_count=250000 thrlo=256 thrhi=256 /usr/bin/mds-survey

Wed May 22 13:16:30 PDT 2013 /usr/bin/mds-survey from mds03

mdt 1 file 250000 dir 256 thr 256 create 57635.67 [57635.67,57635.67] lookup 2949092.93 [2949092.93,2949092.93] md_getattr 1439917.94 [1439917.94,1439917.94] setxattr 59857.89 [59857.89,59857.89] destroy 58406.76 [58406.76,58406.76]

done!

[root@mds03 lu3305]# file_count=250000 thrlo=256 thrhi=256 /usr/bin/mds-survey

Wed May 22 13:16:53 PDT 2013 /usr/bin/mds-survey from mds03

mdt 1 file 250000 dir 256 thr 256 create 57729.97 [57729.97,57729.97] lookup 2745549.65 [2745549.65,2745549.65] md_getattr 1450702.84 [1450702.84,1450702.84] setxattr 31255.98 [31255.98,31255.98] destroy 71909.76 [71909.76,71909.76]

done!

[root@mds03 lu3305]# file_count=250000 thrlo=256 thrhi=256 /usr/bin/mds-survey

Wed May 22 13:17:16 PDT 2013 /usr/bin/mds-survey from mds03

mdt 1 file 250000 dir 256 thr 256 create 57145.08 [57145.08,57145.08] lookup 2610389.07 [2610389.07,2610389.07] md_getattr 1456521.11 [1456521.11,1456521.11] setxattr 31354.29 [31354.29,31354.29] destroy 75608.29 [75608.29,75608.29]

done!

[root@mds03 lu3305]# file_count=250000 thrlo=256 thrhi=256 /usr/bin/mds-survey

Wed May 22 13:17:55 PDT 2013 /usr/bin/mds-survey from mds03

mdt 1 file 250000 dir 256 thr 256 create 53349.04 [53349.04,53349.04] lookup 2678071.11 [2678071.11,2678071.11] md_getattr 1390181.88 [1390181.88,1390181.88] setxattr 34123.68 [34123.68,34123.68] destroy 148934.27 [148934.27,148934.27]

done!

[root@mds03 lu3305]# file_count=250000 thrlo=256 thrhi=256 /usr/bin/mds-survey

Wed May 22 13:18:31 PDT 2013 /usr/bin/mds-survey from mds03

mdt 1 file 250000 dir 256 thr 256 create 54390.24 [54390.24,54390.24] lookup 2724295.09 [2724295.09,2724295.09] md_getattr 1428483.22 [1428483.22,1428483.22] setxattr 34446.16 [34446.16,34446.16] destroy 154758.66 [154758.66,154758.66]

done!
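The quota on and quota off runs above can be summarized per operation. A minimal sketch, assuming result lines in the format shown; `parse_rates` and `summarize` are illustrative helper names, not part of mds-survey, and only the create and destroy rates are copied in here.

```python
import re
from statistics import mean, stdev

# Matches "<op> <rate> [" pairs in an mds-survey result line.
RATE_RE = re.compile(r"(create|lookup|md_getattr|setxattr|destroy)\s+([\d.]+)\s+\[")


def parse_rates(line):
    """Return {operation: ops/sec} for one mds-survey result line."""
    return {op: float(rate) for op, rate in RATE_RE.findall(line)}


def summarize(samples):
    """Return (mean, sample standard deviation) for a list of rates."""
    return mean(samples), stdev(samples)


# create/destroy rates (ops/sec) copied from the five runs in each mode above
quota_on = {
    "create": [44082.00, 44897.20, 43014.95, 42977.31, 42984.65],
    "destroy": [46921.25, 35684.58, 39988.76, 48435.62, 34678.90],
}
quota_off = {
    "create": [57635.67, 57729.97, 57145.08, 53349.04, 54390.24],
    "destroy": [58406.76, 71909.76, 75608.29, 148934.27, 154758.66],
}

for op in ("create", "destroy"):
    on_mean, on_sd = summarize(quota_on[op])
    off_mean, off_sd = summarize(quota_off[op])
    print(f"{op}: quota on {on_mean:.0f} +/- {on_sd:.0f}, "
          f"quota off {off_mean:.0f} +/- {off_sd:.0f}, "
          f"ratio {off_mean / on_mean:.2f}x")
```

With only five runs per mode and a wide spread in the destroy rates, the standard deviation is worth reporting alongside the mean.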

oprofile data for mds-survey run:

[root@mds03 lu3305]# file_count=250000 thrlo=256 thrhi=256 /usr/bin/mds-survey

Wed May 22 09:45:01 PDT 2013 /usr/bin/mds-survey from mds03

mdt 1 file 250000 dir 256 thr 256 create 39545.05 [39545.05,39545.05] lookup 2577588.87 [2577588.87,2577588.87] md_getattr 1247558.37 [1247558.37,1247558.37] setxattr 57218.08 [57218.08,57218.08] destroy 33400.55 [33400.55,33400.55]

done!

This is a previously known problem discussed in LU-2442. The "on-by-default" quota accounting introduced serialization in the quota layer that broke the SMP scaling optimizations done in the 2.3 code. This wasn't fixed until v2_3_63_0-35-g6df197d (http://review.whamcloud.com/5010), so this will hide any regressions in the metadata performance when testing on a faster system, unless the quota feature is disabled on the MDS (tune2fs -O ^quota /dev/mdt).

It may well be that there is still a performance impact from the "on-by-default" quota accounting, which is worthwhile to test before trying to find some other cause for this regression.

Niu

Are you able to advise on the reported drop due to the quotas landing?

Alex/Nasf

Any comments?

Thanks

Peter

Unique directory

| version | commit | Dir creation | Dir stat | Dir removal | File creation | File stat | File removal |
| --- | --- | --- | --- | --- | --- | --- | --- |
| v2_3_50_0-143 | 9ddf386035767a96b54e21559f3ea0be126dc8cd | 22167 | 194845 | 32910 | 50090 | 132461 | 36762 |
| v2_3_50_0-142 | c61d09e9944ae47f68eb159224af7c5456cc180a | 21835 | 203123 | 46178 | 45941 | 147281 | 67253 |
| v2_3_50_0-141 | 48bad5d9db9baa7bca093de5c54294adf1cf8303 | 20542 | 202014 | 48271 | 46264 | 142450 | 66267 |
| 2.3.50 | 04ec54ff56b83a9114f7a25fbd4aa5f65e68ef7a | 24727 | 226128 | 34064 | 27859 | 124521 | 34591 |
| 2.3 | ee695bf0762f5dbcb2ac6d96354f8d01ad764903 | 23565 | 222482 | 44421 | 48333 | 125765 | 76709 |

It seems that there are several regression points between 2.3 and 2.4, but at least in my small-client testing (mdtest on 16 clients, 32 processes), commit 9ddf386035767a96b54e21559f3ea0be126dc8cd might be one of the regression points for the unlink operation. (I collected more data at other commit points, but this is one of the points with a big difference between commits.)

I'm still working to verify whether this is exactly the point, and will run in an environment with a large number of clients.
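The size of the change between v2_3_50_0-142 and v2_3_50_0-143 can be read off the unique-directory table as a percentage per column. A minimal sketch using only the two rows from that table; the `percent_change` helper is illustrative.

```python
# Rates copied from the unique-directory table for the two adjacent commits.
COLUMNS = ["Dir creation", "Dir stat", "Dir removal",
           "File creation", "File stat", "File removal"]
before = [21835, 203123, 46178, 45941, 147281, 67253]  # v2_3_50_0-142
after = [22167, 194845, 32910, 50090, 132461, 36762]   # v2_3_50_0-143


def percent_change(old, new):
    """Relative change from old to new, in percent (negative means slower)."""
    return (new - old) / old * 100.0


for name, old, new in zip(COLUMNS, before, after):
    print(f"{name:14s} {old:7d} -> {new:7d}  {percent_change(old, new):+6.1f}%")
```

These are single measurements per commit, so the percentages indicate where to look rather than a confirmed regression size.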

Niu's patch is at http://review.whamcloud.com/6440.

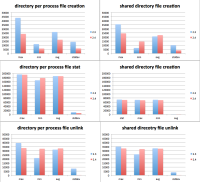

Minh, would you be able to run another set of tests with the latest patch applied, and produce a graph like:

https://jira.hpdd.intel.com/secure/attachment/12415/mdtest_create.png

so it is easier to see what the differences are? Presumably with 5 runs it would be useful to plot the standard deviation, since I see from the text results you posted above that the performance can vary dramatically between runs.