dqget()/dqput() mainly take and drop a reference on the in-memory per-ID dquot data. Both acquire global locks such as dq_list_lock and dq_state_lock (they look up the dquot list and do some state checking), so contention on those global locks could be severe in this test case. If we can replace them with RCU or a read/write lock, things should improve.

I heard from Lai that there were some old patches that tried to remove those global locks, but they didn't attract much interest from the community and were never reviewed. Lai, could you comment on this?

Regarding the quota record commit (mark_dquot_dirty() -> ext4_mark_dquot_dirty() -> ext4_write_dquot() -> dquot_commit(), which should happen along with each transaction): it does require global locks, dqio_mutex and dq_list_lock, but surprisingly I didn't see it in the top oprofile samples. That might just be because dqget()/dqput() calls far outnumber dquot commit calls. Once we resolve the bottleneck in dqget()/dqput(), the contention in dquot commit will probably come to light.

Bug

Major

LU-3396 Disable quota by default for 2.4

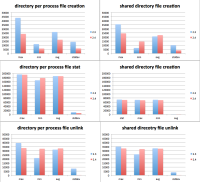

performance data for the patch