I did a few tests on the Rosso cluster. The results are similar to what we got in LU-2442, except that the performance drop with 32 threads that showed up in LU-2442 (with the LU-2442 patch applied) is resolved:

patched:

mdtest-1.8.3 was launched with 32 total task(s) on 1 nodes

Command line used: mdtest -d /mnt/ldiskfs -i 10 -n 25000 -u -F -r

Path: /mnt

FS: 19.7 GiB, Used FS: 17.5%, Inodes: 1.2 Mi, Used Inodes: 4.6%

32 tasks, 800000 files

SUMMARY: (of 10 iterations), rates in ops/sec

| Operation | Max | Min | Mean | Std Dev |
|---|---:|---:|---:|---:|
| File creation | 0.000 | 0.000 | 0.000 | 0.000 |
| File stat | 0.000 | 0.000 | 0.000 | 0.000 |
| File removal | 4042.032 | 1713.613 | 2713.827 | 698.243 |
| Tree creation | 0.000 | 0.000 | 0.000 | 0.000 |
| Tree removal | 2.164 | 1.861 | 2.020 | 0.088 |

-- finished at 07/07/2013 20:37:36 --

CPU: Intel Sandy Bridge microarchitecture, speed 2.601e+06 MHz (estimated)

Counted CPU_CLK_UNHALTED events (Clock cycles when not halted) with a unit mask of 0x00 (No unit mask) count 100000

| samples | % | app name | symbol name |
|---:|---:|---|---|
| 10826148 | 11.6911 | vmlinux | schedule |
| 7089656 | 7.6561 | vmlinux | update_curr |
| 6432166 | 6.9461 | vmlinux | sys_sched_yield |
| 4384494 | 4.7348 | vmlinux | __audit_syscall_exit |
| 4088507 | 4.4152 | libc-2.12.so | sched_yield |
| 3441346 | 3.7163 | vmlinux | system_call_after_swapgs |
| 3337224 | 3.6038 | vmlinux | put_prev_task_fair |
| 3244213 | 3.5034 | vmlinux | audit_syscall_entry |
| 2844216 | 3.0715 | vmlinux | thread_return |
| 2702323 | 2.9182 | vmlinux | rb_insert_color |
| 2636798 | 2.8475 | vmlinux | native_read_tsc |
| 2234644 | 2.4132 | vmlinux | sched_clock_cpu |
| 2182744 | 2.3571 | vmlinux | native_sched_clock |
| 2175482 | 2.3493 | vmlinux | hrtick_start_fair |
| 2152807 | 2.3248 | vmlinux | pick_next_task_fair |
| 2130024 | 2.3002 | vmlinux | set_next_entity |
| 2099576 | 2.2673 | vmlinux | rb_erase |
| 1790101 | 1.9331 | vmlinux | update_stats_wait_end |
| 1777328 | 1.9193 | vmlinux | mutex_spin_on_owner |
| 1701672 | 1.8376 | vmlinux | sysret_check |

unpatched:

mdtest-1.8.3 was launched with 32 total task(s) on 1 nodes

Command line used: mdtest -d /mnt/ldiskfs -i 10 -n 25000 -u -F -r

Path: /mnt

FS: 19.7 GiB, Used FS: 17.8%, Inodes: 1.2 Mi, Used Inodes: 4.6%

32 tasks, 800000 files

SUMMARY: (of 10 iterations), rates in ops/sec

| Operation | Max | Min | Mean | Std Dev |
|---|---:|---:|---:|---:|
| File creation | 0.000 | 0.000 | 0.000 | 0.000 |
| File stat | 0.000 | 0.000 | 0.000 | 0.000 |
| File removal | 2816.345 | 1673.085 | 2122.347 | 342.119 |
| Tree creation | 0.000 | 0.000 | 0.000 | 0.000 |
| Tree removal | 2.296 | 0.111 | 1.361 | 0.866 |

-- finished at 07/07/2013 21:11:03 --

CPU: Intel Sandy Bridge microarchitecture, speed 2.601e+06 MHz (estimated)

Counted CPU_CLK_UNHALTED events (Clock cycles when not halted) with a unit mask of 0x00 (No unit mask) count 100000

| samples | % | image name | app name | symbol name |
|---:|---:|---|---|---|
| 23218790 | 18.3914 | vmlinux | vmlinux | dqput |
| 9549739 | 7.5643 | vmlinux | vmlinux | __audit_syscall_exit |
| 9116086 | 7.2208 | vmlinux | vmlinux | schedule |
| 8290558 | 6.5669 | vmlinux | vmlinux | dqget |
| 5576620 | 4.4172 | vmlinux | vmlinux | update_curr |
| 5343755 | 4.2327 | vmlinux | vmlinux | sys_sched_yield |
| 3251018 | 2.5751 | libc-2.12.so | libc-2.12.so | sched_yield |
| 2907579 | 2.3031 | vmlinux | vmlinux | system_call_after_swapgs |
| 2854863 | 2.2613 | vmlinux | vmlinux | put_prev_task_fair |
| 2793392 | 2.2126 | vmlinux | vmlinux | audit_syscall_entry |
| 2723949 | 2.1576 | vmlinux | vmlinux | kfree |
| 2551007 | 2.0206 | vmlinux | vmlinux | mutex_spin_on_owner |
| 2406364 | 1.9061 | vmlinux | vmlinux | rb_insert_color |
| 2321179 | 1.8386 | vmlinux | vmlinux | thread_return |
| 2184031 | 1.7299 | vmlinux | vmlinux | native_read_tsc |
| 2002277 | 1.5860 | vmlinux | vmlinux | dquot_mark_dquot_dirty |
| 1990135 | 1.5764 | vmlinux | vmlinux | native_sched_clock |
| 1970544 | 1.5608 | vmlinux | vmlinux | set_next_entity |
| 1967852 | 1.5587 | vmlinux | vmlinux | pick_next_task_fair |
| 1966282 | 1.5575 | vmlinux | vmlinux | dquot_commit |
| 1966271 | 1.5575 | vmlinux | vmlinux | sysret_check |
| 1919524 | 1.5204 | vmlinux | vmlinux | unroll_tree_refs |
| 1811281 | 1.4347 | vmlinux | vmlinux | sched_clock_cpu |
| 1810278 | 1.4339 | vmlinux | vmlinux | rb_erase |

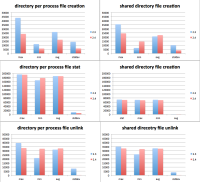

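With 32 threads the unlink rate increased by ~28% (mean File removal rate 2713.827 vs. 2122.347 ops/sec), and the oprofile data shows that contention on dq_list_lock in dqput() is greatly reduced: dqput() is the top symbol in the unpatched profile (18.39% of samples) but does not appear in the patched top 20.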
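As a quick cross-check of these two claims, the Python sketch below recomputes the unlink speedup from the two mdtest means and sums the share of samples that land in quota functions in each oprofile listing. Treating every symbol whose name starts with `dq` as quota code is an assumption of this sketch, samples in those functions are only a proxy for dq_list_lock contention, and the patched figure covers just the 20 listed symbols, so quota code may still show up further down that profile.

```python
"""Recompute the speedup and quota-symbol share from the numbers above."""


def pct_change(new: float, old: float) -> float:
    """Relative change of new vs. old, in percent."""
    return (new / old - 1.0) * 100.0


def quota_share(profile: dict[str, float]) -> float:
    """Sum the % of samples in quota symbols (assumed: names starting 'dq')."""
    return sum(pct for sym, pct in profile.items() if sym.startswith("dq"))


# Mean "File removal" rates (ops/sec) from the two mdtest summaries.
PATCHED_MEAN = 2713.827
UNPATCHED_MEAN = 2122.347

# All quota symbols that appear in the unpatched oprofile listing (% of samples).
UNPATCHED = {
    "dqput": 18.3914,
    "dqget": 6.5669,
    "dquot_mark_dquot_dirty": 1.5860,
    "dquot_commit": 1.5575,
}

# The full patched top-20 listing (% of samples).
PATCHED = {
    "schedule": 11.6911,
    "update_curr": 7.6561,
    "sys_sched_yield": 6.9461,
    "__audit_syscall_exit": 4.7348,
    "sched_yield": 4.4152,
    "system_call_after_swapgs": 3.7163,
    "put_prev_task_fair": 3.6038,
    "audit_syscall_entry": 3.5034,
    "thread_return": 3.0715,
    "rb_insert_color": 2.9182,
    "native_read_tsc": 2.8475,
    "sched_clock_cpu": 2.4132,
    "native_sched_clock": 2.3571,
    "hrtick_start_fair": 2.3493,
    "pick_next_task_fair": 2.3248,
    "set_next_entity": 2.3002,
    "rb_erase": 2.2673,
    "update_stats_wait_end": 1.9331,
    "mutex_spin_on_owner": 1.9193,
    "sysret_check": 1.8376,
}

if __name__ == "__main__":
    print(f"unlink mean rate change: {pct_change(PATCHED_MEAN, UNPATCHED_MEAN):+.1f}%")
    print(f"quota symbols, unpatched: {quota_share(UNPATCHED):.1f}% of samples")
    print(f"quota symbols, patched top 20: {quota_share(PATCHED):.1f}% of samples")
```

With the numbers above it reports a mean rate change of +27.9% (the ~28% quoted) and 28.1% of unpatched samples in quota functions, versus 0.0% among the patched top 20.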

I think we should take this patch as a supplement to the LU-2442 fix.

Bug

Major

LU-3396 Disable quota by default for 2.4

There are two core kernel patches in the RHEL series on master that improve quota performance; both of their names start with "quota".