Details

-

Bug

-

Resolution: Duplicate

-

Blocker

-

Lustre 2.6.0

-

3

-

13338

Description

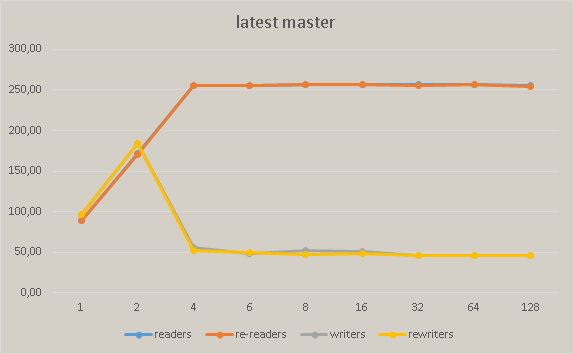

After commit 586e95a5b3f7b9525d78e7efc9f2949387fc9d54 we have significant performance degradation in master. The following pictures show the regress measured on Xeon Phi:

Attachments

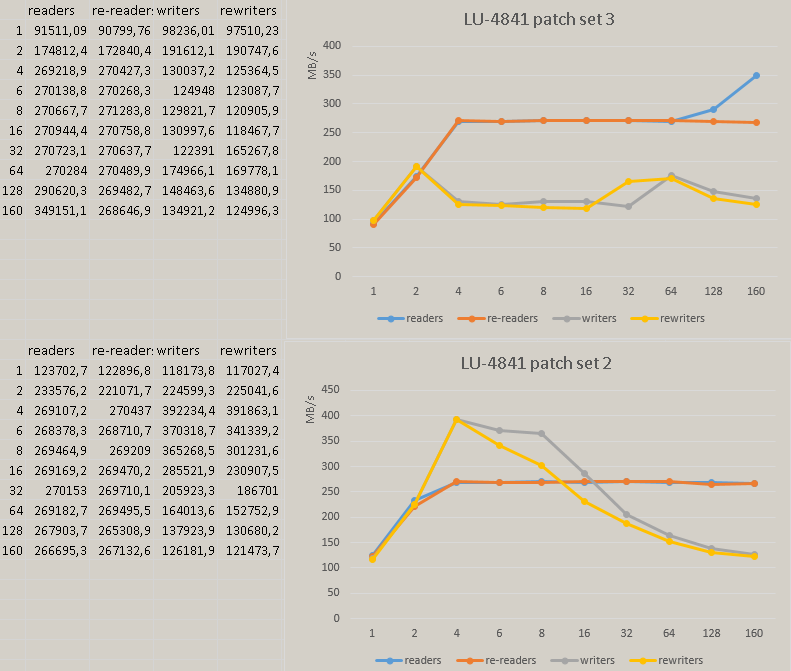

- lu-4841.png

- 42 kB

- lu-4841-1.png

- 46 kB

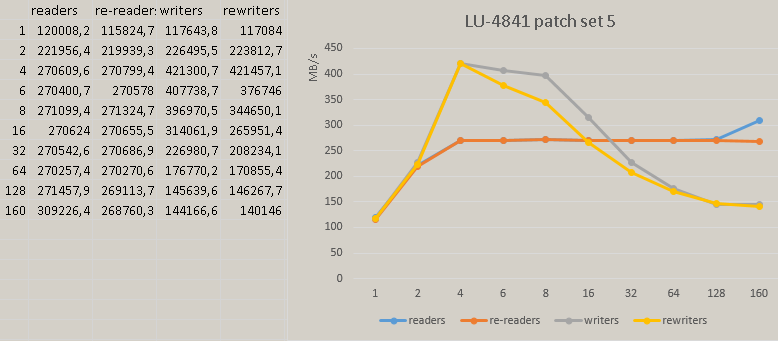

- lu-4841-5.png

- 24 kB

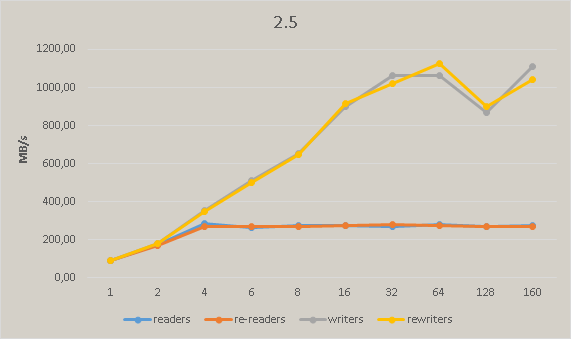

- perf_2.5.png

- 15 kB

- perf_master.png

- 13 kB

Activity

Did you, or did you not, do the following?

Remove kernel NFS unstable pages tracking

That was a critical part of the design. Can you explain how you fixed the memory management issues without that part of the design?

And why would it be correct to make unstable pages part of the page LRU? Those pages cannot be freed. They are still in the limbo state where the server has acknowledged them, but not necessarily yet committed them to disk. When memory needs to be freed, we don't send them again, so there is no reason to walk them as part of the LRU.

Yes, I agree that it's more reasonable to take UNSTABLE pages out of LRU. However, if we didn't do that, we need to find the corresponding cl_page in brw_commit() callback and that will use an atomic operation. Actually the current mechanism is not that bad because usually there are only small part of pages are in UNSTABLE state, and those pages should stay at the tail LRU list; therefore, they shouldn't be scanned at all.

One of my concerns with using UNSTABLE pages is that it will make OST to commit transactions frequently. Therefore a problematic client will send lots of SOFT_SYNC RPCs to OSTs it talks to, which will degrade system performance significantly.

Anyway, this patch didn't remove the feature of UNSTABLE pages tracking. Users can still enable it if performance is not a problem for them.

Folks, can someone explain to me the rationale behind these changes in change 10003?

1. Remove kernel NFS unstable pages tracking because it killed performance

2. Track unstable pages as part of LRU cache. Otherwise Lustre can use much more memory than max_cached_mb

3. Remove obd_unstable_pages tracking to avoid using global atomic counter

Was not the entire point of the unstable page tracking pages to use the NFS unstable pages tracking? The idea was that it gives the Linux kernel a back pressure mechanism when memory is low. max_cached_mb has absolutely no regard for the actual memory usage of a running system; it only works in its own little lustre world.

And why would it be correct to make unstable pages part of the page LRU? Those pages cannot be freed. They are still in the limbo state where the server has acknowledged them, but not necessarily yet committed them to disk. When memory needs to be freed, we don't send them again, so there is no reason to walk them as part of the LRU.

I am rather concerned that we have thrown away correctness in pursuit of performance.

Here is the back-ported patch for Lustre b2_5 branch: http://review.whamcloud.com/12615

The patch depends on the patches for LU-3274 and LU-2139 on Lustre b2_5 branch.

Patch landed to Master. Please reopen ticket if more work is needed.

This is performance test of patch set 5.

Writers stack:

[<ffffffffa09ba014>] osc_enter_cache+0x48d/0x999 [osc] [<ffffffffa09b32f8>] osc_queue_async_io+0xa58/0x1780 [osc] [<ffffffffa099e1d7>] osc_page_cache_add+0x47/0x120 [osc] [<ffffffffa09a54fa>] osc_io_commit_async+0x10a/0x3b0 [osc] [<ffffffffa03242f7>] cl_io_commit_async+0x77/0x130 [obdclass] [<ffffffffa081d581>] lov_io_commit_async+0x271/0x4c0 [lov] [<ffffffffa03242f7>] cl_io_commit_async+0x77/0x130 [obdclass] [<ffffffffa08f38d6>] vvp_io_write_commit+0xf6/0x7e0 [lustre] [<ffffffffa08f40d4>] vvp_io_write_start+0x114/0x390 [lustre] [<ffffffffa03255c5>] cl_io_start+0x65/0x130 [obdclass] [<ffffffffa0328a74>] cl_io_loop+0xb4/0x1b0 [obdclass] [<ffffffffa0893be9>] ll_file_io_generic+0x3b9/0x860 [lustre] [<ffffffffa08942f6>] ll_file_aio_write+0xd6/0x1e0 [lustre] [<ffffffffa089451c>] ll_file_write+0x11c/0x230 [lustre] [<ffffffff810dfb9d>] vfs_write+0xac/0xf3 [<ffffffff810dfd8c>] sys_write+0x4a/0x6e [<ffffffff81002a6b>] system_call_fastpath+0x16/0x1b

For pure master client and client with patch set 3 the stacks are following:

Writers stack:

[<ffffffff810a86dd>] balance_dirty_pages_ratelimited_nr+0x329/0x3a4 [<ffffffff810a2dc1>] generic_file_buffered_write+0x975/0xa9e [<ffffffff810a3129>] __generic_file_aio_write+0x23f/0x270 [<ffffffff810a31b2>] generic_file_aio_write+0x58/0xaa [<ffffffffa08f710d>] vvp_io_write_start+0xfd/0x390 [lustre] [<ffffffffa03255c5>] cl_io_start+0x65/0x130 [obdclass] [<ffffffffa0328a74>] cl_io_loop+0xb4/0x1b0 [obdclass] [<ffffffffa0896be9>] ll_file_io_generic+0x3b9/0x860 [lustre] [<ffffffffa08972f6>] ll_file_aio_write+0xd6/0x1e0 [lustre] [<ffffffffa089751c>] ll_file_write+0x11c/0x230 [lustre] [<ffffffff810dfb9d>] vfs_write+0xac/0xf3 [<ffffffff810dfd8c>] sys_write+0x4a/0x6e [<ffffffff81002a6b>] system_call_fastpath+0x16/0x1b

Readers stack:

[<ffffffff8109f851>] sync_page+0x4d/0x51 [<ffffffff810a012f>] sync_page_killable+0xe/0x3b [<ffffffff8109f788>] __lock_page_killable+0x66/0x68 [<ffffffff810a1c2f>] generic_file_aio_read+0x657/0x864 [<ffffffffa08f5216>] vvp_io_read_start+0x2f6/0x460 [lustre] [<ffffffffa03255c5>] cl_io_start+0x65/0x130 [obdclass] [<ffffffffa0328a74>] cl_io_loop+0xb4/0x1b0 [obdclass] [<ffffffffa0896be9>] ll_file_io_generic+0x3b9/0x860 [lustre] [<ffffffffa0897703>] ll_file_aio_read+0xd3/0x1e0 [lustre] [<ffffffffa089792c>] ll_file_read+0x11c/0x230 [lustre] [<ffffffff810dfc8d>] vfs_read+0xa9/0xf0 [<ffffffff810dfd1e>] sys_read+0x4a/0x6e [<ffffffff81002a6b>] system_call_fastpath+0x16/0x1b

This is comparison of patch set 2 and patch set 3. Also catching usual sleep stacks during test of patch set 2.

Writers stack:

[<ffffffffa09cce44>] osc_enter_cache+0x48d/0x999 [osc] [<ffffffffa09c6138>] osc_queue_async_io+0xa58/0x17b0 [osc] [<ffffffffa09b10b7>] osc_page_cache_add+0x47/0x120 [osc] [<ffffffffa09b82ea>] osc_io_commit_async+0x10a/0x3b0 [osc] [<ffffffffa0450b17>] cl_io_commit_async+0x77/0x130 [obdclass] [<ffffffffa082f581>] lov_io_commit_async+0x271/0x4c0 [lov] [<ffffffffa0450b17>] cl_io_commit_async+0x77/0x130 [obdclass] [<ffffffffa0905796>] vvp_io_write_commit+0xf6/0x7e0 [lustre] [<ffffffffa0905fb6>] vvp_io_write_start+0x136/0x370 [lustre] [<ffffffffa0451de5>] cl_io_start+0x65/0x130 [obdclass] [<ffffffffa0455294>] cl_io_loop+0xb4/0x1b0 [obdclass] [<ffffffffa08ab1d8>] ll_file_io_generic+0x238/0x6d0 [lustre] [<ffffffffa08ab8f5>] ll_file_aio_write+0xe5/0x1f0 [lustre] [<ffffffffa08abb30>] ll_file_write+0x130/0x240 [lustre] [<ffffffff810dfb9d>] vfs_write+0xac/0xf3 [<ffffffff810dfd8c>] sys_write+0x4a/0x6e [<ffffffff81002a6b>] system_call_fastpath+0x16/0x1b

Readers stack:

[<ffffffff8109f851>] sync_page+0x4d/0x51 [<ffffffff810a012f>] sync_page_killable+0xe/0x3b [<ffffffff8109f788>] __lock_page_killable+0x66/0x68 [<ffffffff810a1c2f>] generic_file_aio_read+0x657/0x864 [<ffffffffa0904066>] vvp_io_read_start+0x2f6/0x460 [lustre] [<ffffffffa0451de5>] cl_io_start+0x65/0x130 [obdclass] [<ffffffffa0455294>] cl_io_loop+0xb4/0x1b0 [obdclass] [<ffffffffa08ab1d8>] ll_file_io_generic+0x238/0x6d0 [lustre] [<ffffffffa08abd22>] ll_file_aio_read+0xe2/0x1f0 [lustre] [<ffffffffa08abf60>] ll_file_read+0x130/0x240 [lustre] [<ffffffff810dfc8d>] vfs_read+0xa9/0xf0 [<ffffffff810dfd1e>] sys_read+0x4a/0x6e [<ffffffff81002a6b>] system_call_fastpath+0x16/0x1b

I didn't remove that code but just make it optional, there is a lprocfs entry to control NFS unstable pages tracking, and it's off by default.