Details

- Bug
- Resolution: Fixed
- Major
- Lustre 2.4.0
- 2_3_49_92_1-llnl
- 3
- 3127

Description

We've been seeing strange caching behavior on our PPC IO nodes, eventually resulting in OOM events. This is particularly harmful for us because there are critical system components running in user space on these nodes, forcing us to run with "panic_on_oom" enabled.

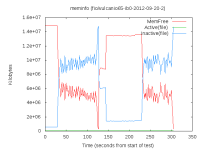

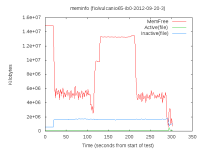

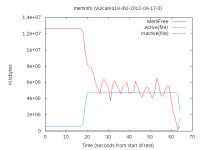

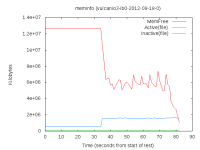

We see a large number of "Active File" pages, as reported by /proc/vmstat and /proc/meminfo, and the count spikes during Lustre IOR jobs. That is unusual for the test I am running: I'm not running any executables out of Lustre, so only "inactive" IOR data should be accumulating in the page cache as a result of the Lustre IO. The really strange thing is that, prior to testing the Orion rebased code, "Active Files" would sometimes stay low (in the hundreds of megabytes) and sometimes grow very large (in the 5 GB range). It's hard to tell whether that variation still exists in the rebased code because the OOM events are hitting more frequently, basically every time I run an IOR.
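For anyone trying to reproduce the observation, here is a minimal Python sketch of how the active/inactive file split could be pulled out of /proc/meminfo. The field names `Active(file)` and `Inactive(file)` are the ones the kernel reports; the helper names and the numbers in `SAMPLE` are made up for illustration and are not taken from the affected nodes.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a {field name: value in kB} dict."""
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "Name: value" line
        parts = rest.split()
        if parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields


def file_cache_split(fields):
    """Return (active kB, inactive kB, active share of file-backed pages)."""
    active = fields.get("Active(file)", 0)
    inactive = fields.get("Inactive(file)", 0)
    total = active + inactive
    share = active / total if total else 0.0
    return active, inactive, share


def read_proc_meminfo(path="/proc/meminfo"):
    """Read and parse the live file (Linux only)."""
    with open(path) as f:
        return parse_meminfo(f.read())


# Hypothetical sample values, for illustration only.
SAMPLE = """\
MemTotal:       16777216 kB
Active(file):    5242880 kB
Inactive(file):  4194304 kB
"""
```

Calling `file_cache_split(read_proc_meminfo())` before, during, and after an IOR run would show whether the active share grows the way described above.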

We also see a large number of "Inactive File" pages. We believe these should be limited by the patch we carry from LU-744, but that doesn't seem to be the case:

commit 98400981e6d6e5707233be2c090e4227a77e2c46
Author: Jinshan Xiong <jinshan.xiong@whamcloud.com>
Date: Tue May 15 20:11:37 2012 -0700

    LU-744 osc: add lru pages management - new RPC

    Add a cache management at osc layer, this way we can control how much
    memory can be used to cache lustre pages and avoid complex solution
    as what we did in b1_8.

    In this patch, admins can set how much memory will be used for caching
    lustre pages per file system. A self-adapative algorithm is used to
    balance those budget among OSCs.

    Signed-off-by: Jinshan Xiong <jinshan.xiong@intel.com>
    Change-Id: I76c840aef5ca9a3a4619f06fcaee7de7f95b05f5
    Revision-Id: 21

From what I can tell, Lustre is trying to limit the cache to the value we set, 4G. When I dump the Lustre page cache I see roughly 4G worth of pages, but that page count does not match the values seen in vmstat and meminfo.
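The comparison above comes down to converting page counts into bytes. A rough sketch of that arithmetic follows; the 64 KiB page size used in the usage note is an assumption (it is a common configuration on PPC, but the real value should be read on the node, for example with `os.sysconf("SC_PAGE_SIZE")`), and the function names are hypothetical.

```python
GIB = 1024 ** 3


def pages_to_gib(n_pages, page_size):
    """Convert a page count to GiB for a given page size in bytes."""
    return n_pages * page_size / GIB


def excess_over_limit(n_pages, page_size, limit_gib):
    """GiB by which a page count exceeds a cache limit (0.0 if within it)."""
    return max(0.0, pages_to_gib(n_pages, page_size) - limit_gib)
```

With an assumed 64 KiB page size, `pages_to_gib(65536, 65536)` is exactly 4.0, so a `nr_inactive_file` count well above 65536 pages would mean more file-backed cache than the 4G limit accounts for.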

So I have a few questions which I'd like to get an answer to:

1. Why are Lustre pages being marked as "referenced" and moved to the Active list in the first place? Without any running executables coming from Lustre I would not expect this to happen.

2. Why are more "Inactive File" pages accumulating on the system past the 4G limit we are trying to set within Lustre?

3. Why can't these "Inactive File" pages be reclaimed when we hit a low-memory situation? This ultimately results in an out-of-memory event and panic_on_oom triggering. This _might_ be related to (1) above.

I added a systemtap script that disables the panic_on_oom flag and dumps the Lustre page cache, /proc/vmstat, and /proc/meminfo to try to gain some understanding of the problem. I'll upload those files as attachments in case they prove useful.
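This is not the systemtap script itself. As a separate, hedged sketch, two saved /proc/vmstat dumps could be compared like this to see how far the file-page counters moved between them. `nr_active_file` and `nr_inactive_file` are real /proc/vmstat counters (in pages); the helper names and sample numbers are hypothetical.

```python
def parse_vmstat(text):
    """Parse /proc/vmstat-style text ("name value" per line) into a dict."""
    counters = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].lstrip("-").isdigit():
            counters[parts[0]] = int(parts[1])
    return counters


def counter_deltas(before, after, keys=("nr_active_file", "nr_inactive_file")):
    """Return after - before for the chosen counters (missing keys count as 0)."""
    return {k: after.get(k, 0) - before.get(k, 0) for k in keys}


# Hypothetical dumps, for illustration only.
BEFORE = "nr_free_pages 200000\nnr_active_file 1000\nnr_inactive_file 2000\n"
AFTER = "nr_free_pages 50000\nnr_active_file 81000\nnr_inactive_file 66000\n"

deltas = counter_deltas(parse_vmstat(BEFORE), parse_vmstat(AFTER))
```

Multiplying each delta by the node's page size gives the growth in bytes, which can then be set against the pages listed in the Lustre page cache dump.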

Issue Links

- is blocked by
  - LU-3274 osc_cache.c:1774:osc_dec_unstable_pages()) ASSERTION( atomic_read(&cli->cl_cache->ccc_unstable_nr) >= 0 ) failed (Resolved)
  - LU-3277 LU-2139 may cause the performance regression (Resolved)
- is duplicated by
  - LU-70 Lustre client OOM with async journal enabled (Resolved)
- is related to
  - LU-5483 recovery-mds-scale test failover_mds: oom failure on client (Reopened)
  - LU-2576 Hangs in osc_enter_cache due to dirty pages not being flushed (Resolved)
  - LU-3321 2.x single thread/process throughput degraded from 1.8 (Resolved)
  - LU-3277 LU-2139 may cause the performance regression (Resolved)
  - LU-3910 Interop 2.4.0<->2.5 failure on test suite parallel-scale-nfsv4 test_iorssf: MDS OOM (Resolved)