Details

- Type: Bug
- Resolution: Fixed
- Priority: Critical


Description

As seen in tickets like LU-5727, we currently rely almost entirely on the good aggregate behavior of the Lustre clients to avoid memory exhaustion on the MDS (and on other servers, no doubt).

We need the servers, the MDS in particular, to limit ldlm lock usage on their own to something reasonable, so that they avoid OOM conditions without depending on the clients. It is not good design to leave the MDS's memory usage entirely up to very careful administrative tuning of ldlm lock limits across all of the client nodes.

Consider that some sites have many thousands of clients across many clusters, where such careful balancing and coordinated client limits may be difficult to achieve. Consider also WAN usage, where some clients might not even reside at the same organization as the servers. Consider also bugs in the client, again like LU-5727.

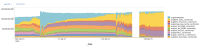

See also the attached graph showing MDS memory usage. Clearly the ldlm lock usage grows without bound, and other parts of the kernel memory usage are put under undue pressure. 70+ GiB of ldlm lock usage is not terribly reasonable for our setup.

Some might argue that the SLV code needs to be fixed, and I have no argument against pursuing that work. That could certainly be handled in some other ticket.

But even if SLV is fixed, we still require enforcement of good memory usage on the server side. There will always be client bugs or client misconfiguration, and the server OOMing is not a reasonable response to those issues.

I would propose a configurable hard limit on the number of locks (or space used by locks) on the server side.

I am open to other solutions, of course.
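To make the proposal concrete, here is a minimal sketch of what a server-side cap on granted locks could look like. It is a toy model in Python, not Lustre code: the `LockBudget` class, the `hard_limit` and `reclaim_threshold` parameters, and the oldest-first reclaim order are all assumptions made for illustration. A real server would have to ask clients to cancel locks rather than simply drop them.

```python
from collections import OrderedDict


class LockBudget:
    """Toy model of a server-side cap on granted locks (all names hypothetical)."""

    def __init__(self, hard_limit, reclaim_threshold):
        if not 0 < reclaim_threshold <= hard_limit:
            raise ValueError("need 0 < reclaim_threshold <= hard_limit")
        self.hard_limit = hard_limit
        self.reclaim_threshold = reclaim_threshold
        self.granted = OrderedDict()  # lock_id -> client, oldest grant first

    def grant(self, lock_id, client):
        """Grant a lock unless the hard limit is reached. Returns True on grant."""
        if len(self.granted) >= self.hard_limit:
            # Refuse the request instead of letting memory grow without bound.
            return False
        self.granted[lock_id] = client
        return True

    def release(self, lock_id):
        """Forget a lock that a client has cancelled on its own."""
        self.granted.pop(lock_id, None)

    def reclaim(self):
        """Take back the oldest locks until the count is at the threshold.

        Returns the (lock_id, client) pairs that were reclaimed. In a real
        server each of these would be a callback to the owning client.
        """
        reclaimed = []
        while len(self.granted) > self.reclaim_threshold:
            reclaimed.append(self.granted.popitem(last=False))
        return reclaimed


budget = LockBudget(hard_limit=4, reclaim_threshold=2)
results = [budget.grant(i, "client-a") for i in range(6)]
print(results)           # the last two requests are refused
print(budget.reclaim())  # the two oldest locks are taken back
```

The same structure works if the budget is expressed in bytes used by locks instead of a lock count; only the comparison in `grant` and `reclaim` changes.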


Issue Links

- is related to
  - LU-7266 Fix LDLM pool to make LRUR working properly (Open)
  - LU-6390 lru_size on the OSC is not honored (Resolved)
  - LU-6929 typo in cfs_hash_for_each_relax() (Resolved)
  - LU-1520 client fails MDS connection and stack threads on another client (Resolved)
  - LU-6775 Reduce memory footprint of ldlm_lock and ldlm_resource (Resolved)
  - LU-11672 improving lru_max_age policy when lru resize is disabled (Resolved)
  - LU-14221 Client hangs when using DoM with a fixed mdc lru_size (Closed)
  - LU-11509 LDLM: replace client lock LRU with improved cache algorithm (Open)
  - LU-14858 kernfs tree to dump/traverse ldlm lock resources for debug (Open)
  - LU-14859 cancel client DLM locks from the server (Open)
  - LU-14517 Decrease default lru_max_age value (Resolved)
- is related to
  - LU-5727 MDS OOMs with 2.5.3 clients and lru_size != 0 (Resolved)
  - LU-8209 glimpse lock request does not engage ELC to drop unneeded locks (Resolved)
  - LU-17428 reduce default value for lru_max_age to 300s (Resolved)

Oleg Drokin (oleg.drokin@intel.com) merged in patch http://review.whamcloud.com/14931/

Subject: LU-6529 ldlm: reclaim granted locks defensively

Project: fs/lustre-release

Branch: master

Current Patch Set:

Commit: fe60e0135ee2334440247cde167b707b223cf11d