Details

- Bug
- Resolution: Fixed
- Critical
- Lustre 2.1.0, Lustre 2.2.0, Lustre 2.4.0, Lustre 1.8.6
- 3
- 5914

Description

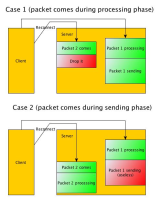

While originally this was a useful workaround, it created a lot of other unintended problems.

This code must be disabled; instead, we should simply disallow handling several duplicate requests at the same time.
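As a rough illustration of "not handling several duplicate requests at the same time", the sketch below tracks in-progress requests and refuses to start a second copy of one that is still being handled. It is not the Lustre code or the actual patch. All names (`inflight_req`, `request_begin`, `request_end`, `MAX_INFLIGHT`) and the choice of keying on a client id plus a request id are assumptions made for this example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_INFLIGHT 64  /* hypothetical table size for this sketch */

/* Hypothetical key: a client identifier plus a per-request id. */
struct inflight_req {
    bool     used;
    uint64_t client_id;
    uint64_t xid;
};

static struct inflight_req inflight[MAX_INFLIGHT];

/* Returns true if the request may be handled now. Returns false if an
 * identical request from the same client is already in progress, or if
 * the table is full (a limitation of this sketch only). */
static bool request_begin(uint64_t client_id, uint64_t xid)
{
    struct inflight_req *free_slot = NULL;

    for (size_t i = 0; i < MAX_INFLIGHT; i++) {
        struct inflight_req *r = &inflight[i];

        if (r->used && r->client_id == client_id && r->xid == xid)
            return false;  /* duplicate: do not handle concurrently */
        if (!r->used && free_slot == NULL)
            free_slot = r;
    }
    if (free_slot == NULL)
        return false;

    free_slot->used = true;
    free_slot->client_id = client_id;
    free_slot->xid = xid;
    return true;
}

/* Marks the request as finished so that a later resend can be handled. */
static void request_end(uint64_t client_id, uint64_t xid)
{
    for (size_t i = 0; i < MAX_INFLIGHT; i++) {
        struct inflight_req *r = &inflight[i];

        if (r->used && r->client_id == client_id && r->xid == xid) {
            r->used = false;
            return;
        }
    }
}

/* Usage: the second copy is refused only while the first is in progress. */
static void example_usage(void)
{
    assert(request_begin(1, 100));   /* first copy is accepted */
    assert(!request_begin(1, 100));  /* concurrent duplicate is refused */
    request_end(1, 100);
    assert(request_begin(1, 100));   /* resend after completion is accepted */
    request_end(1, 100);
}
```

The point of the design is that a duplicate is rejected only while the original is still being processed; other requests from the same client are unaffected.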

Issue Links

- duplicates
  - LU-7 Reconnect server->client connection (Resolved)
- is related to
  - LU-2621 SIngle client timeout hangs MDS -related to LU-793 (Resolved)
  - LU-4349 conf-sanity test_47: test failed to respond and timed out (Resolved)
  - LU-4458 Interop 2.5.0<->2.6 failure on test suite recovery-small test_9 (Resolved)
  - LU-18072 Lock cancel resending overwhelms ldlm canceld thread (Resolved)
  - LU-7 Reconnect server->client connection (Resolved)
  - LU-4359 1.8.9 clients has endless bulk IO timeouts with 2.4.1 servers (Resolved)
  - LU-2429 easy to find bad client (Resolved)
  - LU-4480 cfs_fail_timeout id 214 sleeping for -1000ms (Closed)

- Trackbacks
  - [Confluence: Engineering] Lustre 1.8.x known issues tracker

    While testing against Lustre b18 branch, we would hit known bugs which were already reported in Lustre Bugzilla https://bugzilla.lustre.org/. In order to move away from relying on Bugzilla, we would create a JIRA

The patch was refreshed: http://review.whamcloud.com/#/c/4960/. It doesn't handle bulk requests for now; this will be solved in a following patch.