Details

- Bug
- Resolution: Won't Fix
- Minor
- None
- Lustre 2.4.2
- CentOS 6.5
- 3
- 14543

Description

After upgrading a system from

lustre 2.4.0-1 / zfs-0.6.2-1 to

lustre 2.4.2-1 / zfs-0.6.3-1

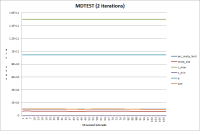

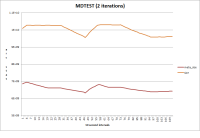

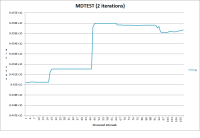

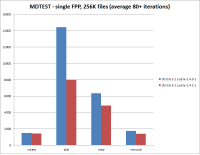

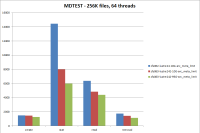

mdtest shows a signficantly lower stat performance - about 8000 iops vs 14400

File reads and file removals are also slower, but the drop is less severe. See the attached graph.

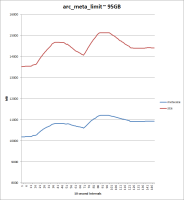

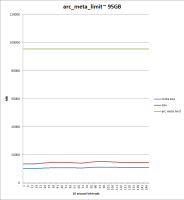

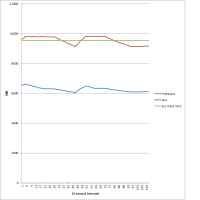
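For scale, here is a quick check of how large the stat drop is in relative terms. It uses only the approximate mdtest stat rates quoted above (rounded figures from this report, not new measurements). The helper name `relative_drop` is just for illustration.

```python
def relative_drop(before: float, after: float) -> float:
    """Fractional decrease from `before` to `after` (0.25 means 25% lower)."""
    return (before - after) / before

# Approximate mdtest stat rates quoted in this report (operations per second).
old_stat = 14400  # lustre 2.4.0-1 / zfs-0.6.2-1
new_stat = 8000   # lustre 2.4.2-1 / zfs-0.6.3-1

drop = relative_drop(old_stat, new_stat)
print(f"stat rate is {drop:.1%} lower ({new_stat / old_stat:.1%} of the old rate)")
# prints: stat rate is 44.4% lower (55.6% of the old rate)
```

In other words, stat throughput fell by a bit under half, which is why the relative difference stands out even if the absolute rate may still be adequate.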

We do see marked improvements elsewhere after the upgrade, for example with system processes waiting on the MDS.

I wonder whether this is an expected performance trade-off in the new version. I'm guessing the absolute stat numbers are still acceptable for our workload, but the relative difference is quite large.

Scott

Attachments

Issue Links

- is related to: LU-5203 Update ZFS Version to 0.6.3 (Resolved)