Details

Bug

Resolution: Fixed

Blocker

Lustre 2.4.0

Tested on 2.3.64 and 1.8.9 clients with 4 OSSs, each with 3 x 32 GB OST ramdisks

3

8259

Description

Single-thread/process throughput on tag 2.3.64 is degraded compared with 1.8.9, and significantly degraded once the client hits its caching limit (llite.*.max_cached_mb). The attached graph shows LNet stats sampled every second for a single dd writing two 64 GB files, followed by dropping the cache and reading the same two files back. The tests were not run simultaneously, but the graph plots them from the same starting point. Dropping the cache also takes significantly longer on 2.3.64.
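The procedure above can be sketched as a small shell function. This is a reproduction aid under assumptions, not the reporter's script. The function name `dd_single_stream`, the file names, and passing the directory and block count as arguments are all hypothetical. With `bs=1M`, a count of 65536 gives the 64 GiB files used here (the 68719476736 bytes dd reports). The cache drop only happens when the function runs as root. Otherwise, the reads may be served from the client page cache.

```shell
# Hypothetical helper reproducing the single-stream dd test.
# Usage: dd_single_stream DIR COUNT
#   DIR   - directory on the Lustre mount (placeholder; any writable path works)
#   COUNT - number of 1 MiB blocks per file (65536 = 64 GiB, as in the report)
dd_single_stream() {
  dir=$1
  count=$2
  # Write two files one after the other, each with a single dd process;
  # keep only dd's summary line (bytes copied, time, rate).
  for f in testfile1 testfile2; do
    dd if=/dev/zero of="$dir/$f" bs=1M count="$count" 2>&1 | tail -n 1
  done
  # Drop the clean page cache so the reads go to the servers (root only).
  if [ "$(id -u)" -eq 0 ]; then
    sync
    echo 1 > /proc/sys/vm/drop_caches || echo "could not drop caches" >&2
  fi
  # Read both files back sequentially.
  for f in testfile1 testfile2; do
    dd if="$dir/$f" of=/dev/null bs=1M 2>&1 | tail -n 1
  done
}
```

For example, run `dd_single_stream /mnt/lustre/ddtest 65536`, where `/mnt/lustre/ddtest` is a placeholder path. To time the cache drop as in the report, wrap the `echo 1 > /proc/sys/vm/drop_caches` step in the shell's `time`.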

Lustre 2.3.64

Write (dd if=/dev/zero of=testfile bs=1M)

68719476736 bytes (69 GB) copied, 110.459 s, 622 MB/s

68719476736 bytes (69 GB) copied, 147.935 s, 465 MB/s

Drop caches (echo 1 > /proc/sys/vm/drop_caches)

real 0m43.075s

Read (dd if=testfile of=/dev/null bs=1M)

68719476736 bytes (69 GB) copied, 99.2963 s, 692 MB/s

68719476736 bytes (69 GB) copied, 142.611 s, 482 MB/s

Lustre 1.8.9

Write (dd if=/dev/zero of=testfile bs=1M)

68719476736 bytes (69 GB) copied, 63.3077 s, 1.1 GB/s

68719476736 bytes (69 GB) copied, 67.4487 s, 1.0 GB/s

Drop caches (echo 1 > /proc/sys/vm/drop_caches)

real 0m9.189s

Read (dd if=testfile of=/dev/null bs=1M)

68719476736 bytes (69 GB) copied, 46.4591 s, 1.5 GB/s

68719476736 bytes (69 GB) copied, 52.3635 s, 1.3 GB/s
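The size of the regression can be checked directly from the dd output above. The sketch below uses two hypothetical helpers, `mb_per_s` and `slowdown`. It recomputes dd's rate in decimal MB/s (the unit dd prints) from bytes and seconds, and it divides elapsed times. For the first write, 2.3.64 takes about 1.74 times as long as 1.8.9. The cache drop takes about 4.7 times as long.

```shell
# Hypothetical helper: dd's rate in decimal MB/s from bytes and seconds.
mb_per_s() {
  awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%.0f\n", bytes / secs / 1000000 }'
}

# Hypothetical helper: ratio of two elapsed times (slower run / faster run).
slowdown() {
  awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.2f\n", slow / fast }'
}

# First 64 GiB write on each client version.
mb_per_s 68719476736 110.459   # 2.3.64: prints 622
mb_per_s 68719476736 63.3077   # 1.8.9: prints 1085
slowdown 110.459 63.3077       # prints 1.74
```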


Issue Links

is related to:

- LU-2032 small random read i/o performance regression (Open)
- LU-4201 Test failure sanityn test_51b: file size is 4096, should be 1024 (Resolved)
- LU-2622 All CPUs spinning on cl_envs_guard lock under ll_releasepage during memory reclaim (Resolved)
- LU-4786 Apparent denial of service from client to mdt (Resolved)
- LU-7912 Stale comment in osc_page_transfer_add (Resolved)
- LU-2946 vvp_write_{pending|complete} should be inode based (Resolved)

is related to:

- LU-744 Single client's performance degradation on 2.1 (Resolved)
- LU-2139 Tracking unstable pages (Resolved)

Hi Jinshan,

Here are the results of the performance measurements I have done.

Configuration

The client is a node with 2 Ivy Bridge sockets (24 cores, 2.7 GHz), 32 GB of memory, and 1 FDR InfiniBand adapter.

The OSS is a node with 2 Sandy Bridge sockets (16 cores, 2.2 GHz), 32 GB of memory, 1 FDR InfiniBand adapter, 5 OST devices from a disk array, and 1 OST ramdisk device.

Each disk array OST reaches 900 MiB/s write and 1100 MiB/s read with obdfilter-survey.

Two Lustre versions were tested: 2.5.57 and 1.8.8-wc1.

The OSS cache is disabled (writethrough_cache_enable=0 and read_cache_enable=0).
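On the OSS side, these two settings are normally changed with `lctl set_param`. This minimal sketch assumes the `obdfilter.*` parameter names used by 2.x servers of this era. Later releases may expose them under a different prefix. Run it as root on each OSS. Settings changed with `set_param` in this form do not persist across a remount.

```shell
# On each OSS, as root: disable the OSS read cache and the write-through cache.
lctl set_param obdfilter.*.read_cache_enable=0 obdfilter.*.writethrough_cache_enable=0

# Check the values now in effect on every OST of this OSS.
lctl get_param obdfilter.*.read_cache_enable obdfilter.*.writethrough_cache_enable
```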

Benchmark

IOR with the following options:

api=POSIX
filePerProc=1
blockSize=64G
transferSize=1M
numTasks=1
fsync=1
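These options map onto an IOR input script. The sketch below writes them out with a hypothetical helper, `write_ior_script`. The `IOR START` / `RUN` / `IOR STOP` layout follows IOR's script format. The report does not state where the test file lives or whether the write and read phases ran as separate invocations, so those details are left to the caller.

```shell
# Hypothetical helper: write the IOR options listed above to an IOR input script.
write_ior_script() {
  cat > "$1" <<'EOF'
IOR START
  api=POSIX
  filePerProc=1
  blockSize=64G
  transferSize=1M
  numTasks=1
  fsync=1
  RUN
IOR STOP
EOF
}

# Example, run from a directory on the Lustre mount:
#   write_ior_script ior.script && mpirun -np 1 ior -f ior.script
```

A roughly equivalent command line is `ior -a POSIX -F -b 64g -t 1m -e`, launched with a single MPI task.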

Server and client system caches are cleared before each write test and each read test.

Each test is repeated 3 times, and the average is reported.

Tests are run as a standard (non-root) user.

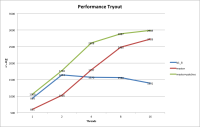

Results

With the disk array OSTs, the best results are achieved with a stripe count of 3.
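A stripe count of 3 is usually set on the test directory so that the file-per-process IOR files inherit it. This minimal sketch uses `lfs`. `/mnt/lustre/ior` is a placeholder path. The default stripe size is kept because the report does not mention changing it.

```shell
# New files created in this directory will be striped across 3 OSTs.
lfs setstripe -c 3 /mnt/lustre/ior

# Show the directory's default layout (not the layouts of the files inside it).
lfs getstripe -d /mnt/lustre/ior
```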

Write performance stays below 1 GiB/s, which was my goal. Do you think this level of performance is achievable? What tuning would you recommend? I will attach monitoring data for one of the Lustre 2.5.57 runs.

For comparison, the results with the ramdisk OST:

Various tunings were tested but gave no improvement:

IOR transferSize, llite max_cached_mb, OSS cache enabled.

What makes a significant difference with Lustre 2.5.57 is running the test as root: write performance then reaches 926 MiB/s (+4.5% compared to a standard user). Should I open a separate ticket to track this difference?
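Two quick checks on these numbers follow, written as a sketch with hypothetical helpers. The first derives the standard-user rate implied by "926 MiB/s (+4.5%)". The second shows how close each rate comes to the 1 GiB/s (1024 MiB/s) goal. The +4.5% is itself rounded, so the derived standard-user figure is only approximate.

```shell
# Hypothetical helper: rate before a relative gain of PCT percent, given the rate after it.
baseline_rate() {
  awk -v after="$1" -v pct="$2" 'BEGIN { printf "%.0f\n", after / (1 + pct / 100) }'
}

# Hypothetical helper: percentage of a target rate that was reached.
pct_of_goal() {
  awk -v rate="$1" -v goal="$2" 'BEGIN { printf "%.1f\n", rate / goal * 100 }'
}

baseline_rate 926 4.5    # standard user, MiB/s: prints 886
pct_of_goal 926 1024     # root run vs 1 GiB/s goal: prints 90.4
pct_of_goal 886 1024     # standard user vs 1 GiB/s goal: prints 86.5
```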

Greg.