Details

- New Feature
- Resolution: Fixed
- Minor
- Lustre 2.8.0
- 14856

Description

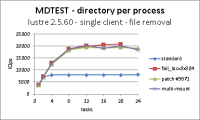

While running the mdtest benchmark, I observed that file creation and unlink operations from a single Lustre client quickly saturate at around 8000 IOPS: the maximum is reached with as few as 4 tasks in parallel.

When using several Lustre mount points on a single client node, the file creation and unlink rates do scale with the number of tasks, up to the 16 cores of my client node.

Looking at the code, it appears that most metadata operations are serialized by a mutex in the MDC layer.

In the mdc_reint() routine, request posting is protected by mdc_get_rpc_lock() and mdc_put_rpc_lock(), where the lock is:

struct client_obd -> struct mdc_rpc_lock *cl_rpc_lock -> struct mutex rpcl_mutex.

After an email discussion with Andreas Dilger, it appears that the limitation is actually on the MDS, since it cannot handle more than one filesystem-modifying RPC per client at a time. There is only one slot in the MDT last_rcvd file for each client to save the state for the reply in case it is lost.

The aim of this ticket is to implement multiple slots per client in the last_rcvd file so that several filesystem-modifying RPCs can be handled in parallel.

Single-client metadata performance should be significantly improved while still ensuring a safe recovery mechanism.
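As a rough illustration of the idea only, here is a toy in-memory model of per-client reply slots. It is not the on-disk last_rcvd format or the actual patch: every name (toy_client, toy_reply_slot, toy_save_reply and so on), the slot fields, and the fixed slot limit are assumptions made for this sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_MAX_SLOTS 8 /* hypothetical upper bound for this sketch */

/* Hypothetical saved reply state for one filesystem-modifying RPC. */
struct toy_reply_slot {
    int      in_use;
    uint64_t xid;     /* request id, used to match a resent RPC */
    uint64_t transno; /* transaction number of the modification */
    int      result;  /* result that would be returned again on resend */
};

/* Hypothetical per-client state: nslots == 1 models the single-slot case. */
struct toy_client {
    size_t nslots;
    struct toy_reply_slot slots[TOY_MAX_SLOTS];
};

static void toy_client_init(struct toy_client *c, size_t nslots)
{
    assert(nslots >= 1 && nslots <= TOY_MAX_SLOTS);
    c->nslots = nslots;
    for (size_t i = 0; i < TOY_MAX_SLOTS; i++)
        c->slots[i].in_use = 0;
}

/* Save reply state in a free slot; return its index, or -1 when every
 * slot is busy (the RPC would have to wait, i.e. be serialized). */
static int toy_save_reply(struct toy_client *c, uint64_t xid,
                          uint64_t transno, int result)
{
    for (size_t i = 0; i < c->nslots; i++) {
        if (!c->slots[i].in_use) {
            c->slots[i].in_use = 1;
            c->slots[i].xid = xid;
            c->slots[i].transno = transno;
            c->slots[i].result = result;
            return (int)i;
        }
    }
    return -1;
}

/* Look up the saved state for a resent RPC; NULL if nothing is saved. */
static const struct toy_reply_slot *toy_find_reply(const struct toy_client *c,
                                                   uint64_t xid)
{
    for (size_t i = 0; i < c->nslots; i++)
        if (c->slots[i].in_use && c->slots[i].xid == xid)
            return &c->slots[i];
    return NULL;
}

/* Free a slot once the client is known to have received the reply. */
static void toy_release_slot(struct toy_client *c, int idx)
{
    assert(idx >= 0 && (size_t)idx < c->nslots);
    c->slots[idx].in_use = 0;
}
```

With nslots set to 1, a second toy_save_reply() call fails until the first slot is released, which mirrors the serialization described above. With several slots, multiple modifying RPCs can each keep their reply state at the same time.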

Attachments

Issue Links

- is related to
  - LU-6840 update memory reply data in DNE update replay (Resolved)
  - LU-5951 sanity test_39k: mtime is lost on close (Resolved)
  - LU-6981 obd_last_committed is not updated in tgt_reply_data_init() (Resolved)
  - LU-7729 Don't return ptlrpc_error() in process_req_last_xid(). (Resolved)
  - LU-7028 racer:kernel:BUG: spinlock bad magic on CPU#0 (Resolved)
  - LU-7082 conf-sanity test_90b: MDT start failed (Resolved)
  - LU-7408 multislot RPC support didn't declare write for reply_data object (Resolved)
  - LU-6841 replay-single test_30: multiop 20786 failed (Closed)
  - LU-3285 Data on MDT (Resolved)
  - LU-14144 get and set Lustre module parameters via "lctl get_param/set_param" (Open)
  - LU-933 allow disabling the mdc_rpc_lock for performance testing (Resolved)
  - LU-6753 Fix several minor improvements to multislots feature (Resolved)

- is related to
  - LU-6386 lower transno may overwrite the bigger one in client last_rcvd slot (Resolved)
  - LU-7185 restore flags on ptlrpc_connect_import failure to prevent LBUG (Resolved)
  - LU-7410 After downgrade from 2.8 to 2.5.5, hit unsupported incompat filesystem feature(s) 400 (Resolved)
  - LU-6864 DNE3: Support multiple modify RPCs in flight for MDT-MDT connection (Resolved)
  - LUDOC-304 Updates related to support of multiple modify RPCs in parallel (Resolved)

Activity

| Link | New: This issue is duplicated by DELL-242 [ DELL-242 ] |

| Remote Link | Original: This issue links to "Page (HPDD Community Wiki)" [ 15849 ] | New: This issue links to "Page (HPDD Community Wiki)" [ 15849 ] |

| Link | New: This issue is related to LDEV-37 [ LDEV-37 ] |

| Link | New: This issue is related to JFC-15 [ JFC-15 ] |

The multi-slots implementation introduced a regression, see LU-5951. To find the unreplied requests by scanning the sending/delayed lists, the current multi-slots implementation moved xid assignment from the request packing stage to the request sending stage. However, that breaks the original mechanism used to coordinate timestamp updates on OST objects (caused by out-of-order operations such as setattr, truncate and write).

To fix this regression, LU-5951 moved xid assignment back to the request packing stage and introduced an unreplied list to track all unreplied requests. Following is a brief description of the LU-5951 patch:

obd_import->imp_unreplied_list is introduced to track all the unreplied requests. All requests in the list are sorted by xid, so that the client can get the known maximal replied xid by checking the first element in the list.

obd_import->imp_known_replied_xid is introduced for sanity checking; it is updated along with imp_unreplied_list.

Once a request is built, it is inserted into the unreplied list. When the reply is seen by the client or the request is about to be freed, the request is removed from the list. Two tricky points are worth mentioning here:

1. Replay requests need to be added back to the unreplied list before sending. Instead of adding them back one by one during replay, we chose to add them all back together before replay, which makes strict sanity checking easier and is less bug-prone.

2. The sanity check on the server side is now much stricter. To satisfy it, connect and disconnect requests no longer carry the known replied xid; see the comments in ptlrpc_send_new_req() for details.
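The unreplied-list bookkeeping described above can be sketched with a small standalone model. This is not the LU-5951 patch: the types and functions (toy_req, toy_import, toy_add_unreplied and so on) are hypothetical. It also assumes two things not stated above: that every xid below the smallest unreplied xid counts as replied, and that an empty list returns the cached value.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified request: only the xid and a list link. */
struct toy_req {
    uint64_t xid;
    struct toy_req *next;
};

/* Hypothetical stand-in for the import's unreplied-request tracking. */
struct toy_import {
    struct toy_req *unreplied;  /* singly linked, ascending xid order */
    uint64_t known_replied_xid; /* cached value, for sanity checking */
};

/* Insert a newly built request, keeping the list sorted by xid. */
static void toy_add_unreplied(struct toy_import *imp, struct toy_req *req)
{
    struct toy_req **pos = &imp->unreplied;

    while (*pos != NULL && (*pos)->xid < req->xid)
        pos = &(*pos)->next;
    req->next = *pos;
    *pos = req;
}

/* Remove a request once its reply is seen or it is about to be freed. */
static void toy_del_unreplied(struct toy_import *imp, struct toy_req *req)
{
    struct toy_req **pos = &imp->unreplied;

    while (*pos != NULL && *pos != req)
        pos = &(*pos)->next;
    if (*pos == req) {
        *pos = req->next;
        req->next = NULL;
    }
}

/* Only the first (smallest-xid) element needs to be checked. */
static uint64_t toy_known_replied_xid(struct toy_import *imp)
{
    uint64_t xid;

    if (imp->unreplied == NULL)
        return imp->known_replied_xid; /* assumption: reuse cached value */

    assert(imp->unreplied->xid >= 1);
    xid = imp->unreplied->xid - 1;
    if (imp->known_replied_xid < xid)
        imp->known_replied_xid = xid; /* the cache only moves forward */
    return xid;
}
```

Because the list stays sorted on insert, the lookup is constant time; the cost is a linear walk on insert and removal, which is acceptable for a sketch.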